The Challenges of Data Migration: Ensuring Smooth Transitions Between Systems

Author: Ainsley Lawrence

In the AI era, organizations are eager to harness innovation and create value through high-quality, relevant data. Gartner, however, projects that 80% of data governance initiatives will fail by 2027. This statistic underscores the urgent need for robust data platforms and governance frameworks. A successful data strategy outlines best practices and establishes a clear vision for data architecture, […]

The post Technical and Strategic Best Practices for Building Robust Data Platforms appeared first on DATAVERSITY.

Author: Alok Abhishek

The retail world is constantly evolving, and in this fast-paced environment, understanding your customer is more important than ever. It’s no longer just about making a sale; it’s about creating a journey that turns casual shoppers into loyal customers. With the rise of advanced retail data tools, businesses can now dig deep into customer preferences and behaviors […]

The post How Retail Data Products Are Changing Customer Journey Mapping appeared first on DATAVERSITY.

Author: Mridula Dileepraj Kidiyur

Modern data management requires a variety of technologies and tools to support the people responsible for ensuring that data is trustworthy and secure. The scale of the data challenge has drawn a massive number of vendors offering solutions that promise to solve data issues.

With the vendor landscape constantly evolving, it can be hard to know where to start. It can also be hard to evaluate vendors in a way that shows their true capabilities rather than just sales speak. When it comes to data intelligence, it can be difficult even to define what the term means for your business.

With budgets stretched ever thinner and new demands placed on data, you need data technologies that meet your requirements for performance, reliability, manageability, and validation. You also want to know that the product has a strong roadmap and a reputation for dependable service, giving you the confidence to meet current and future needs.

Independent Assessments Are Key to Informing Buying Decisions

Independent analyst reports and buying guides can help you make informed decisions when evaluating and ultimately purchasing software that aligns with your workloads and use cases. The reports offer unbiased, critical insights into the advantages and drawbacks of vendors’ products. The information cuts through marketing jargon to help you understand how technologies truly perform, helping you choose a solution with confidence.

These reports are typically based on thorough research and analysis, considering various factors such as product capabilities, customer satisfaction, and market performance. This objectivity helps you avoid the pitfalls of biased or incomplete information.

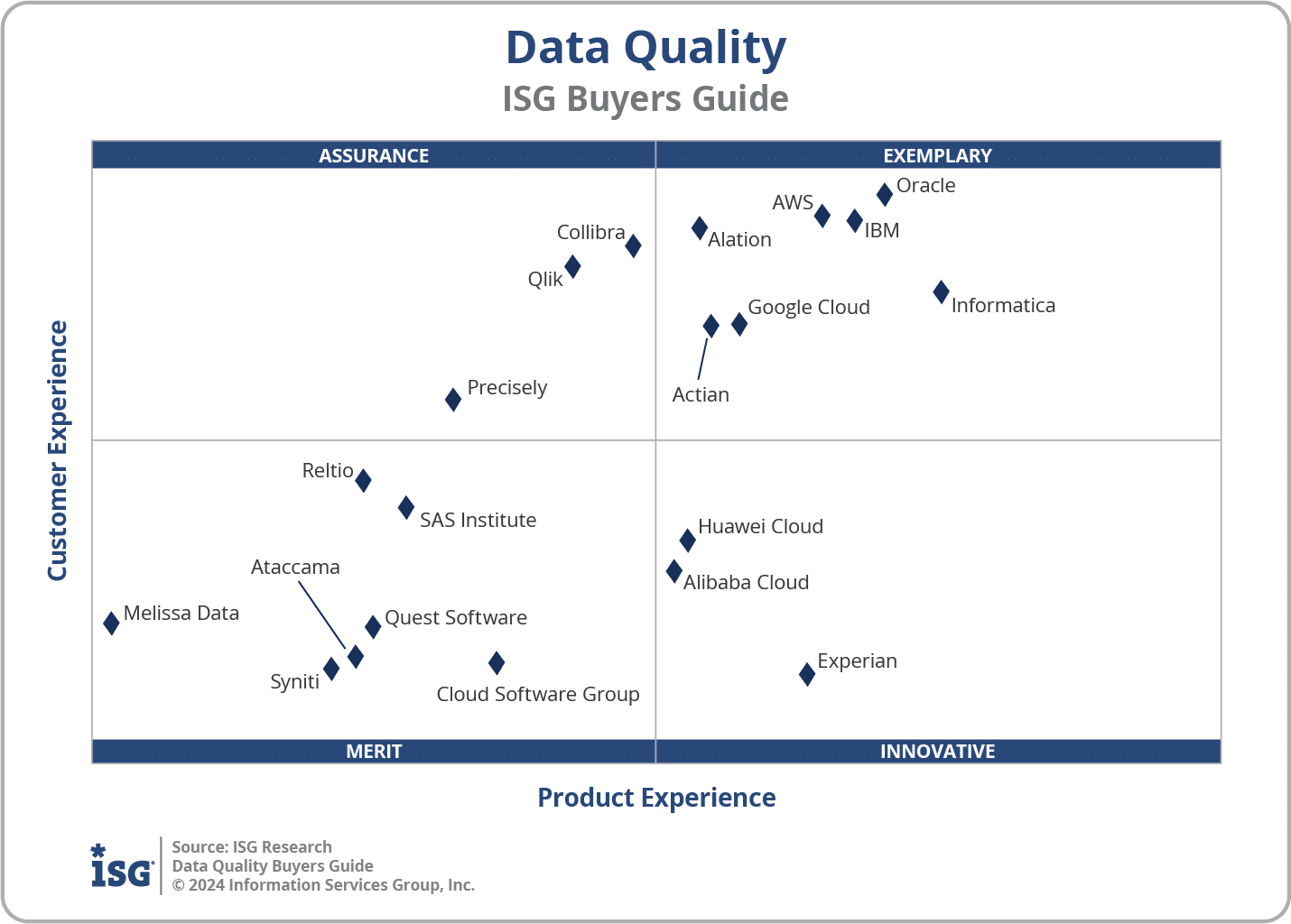

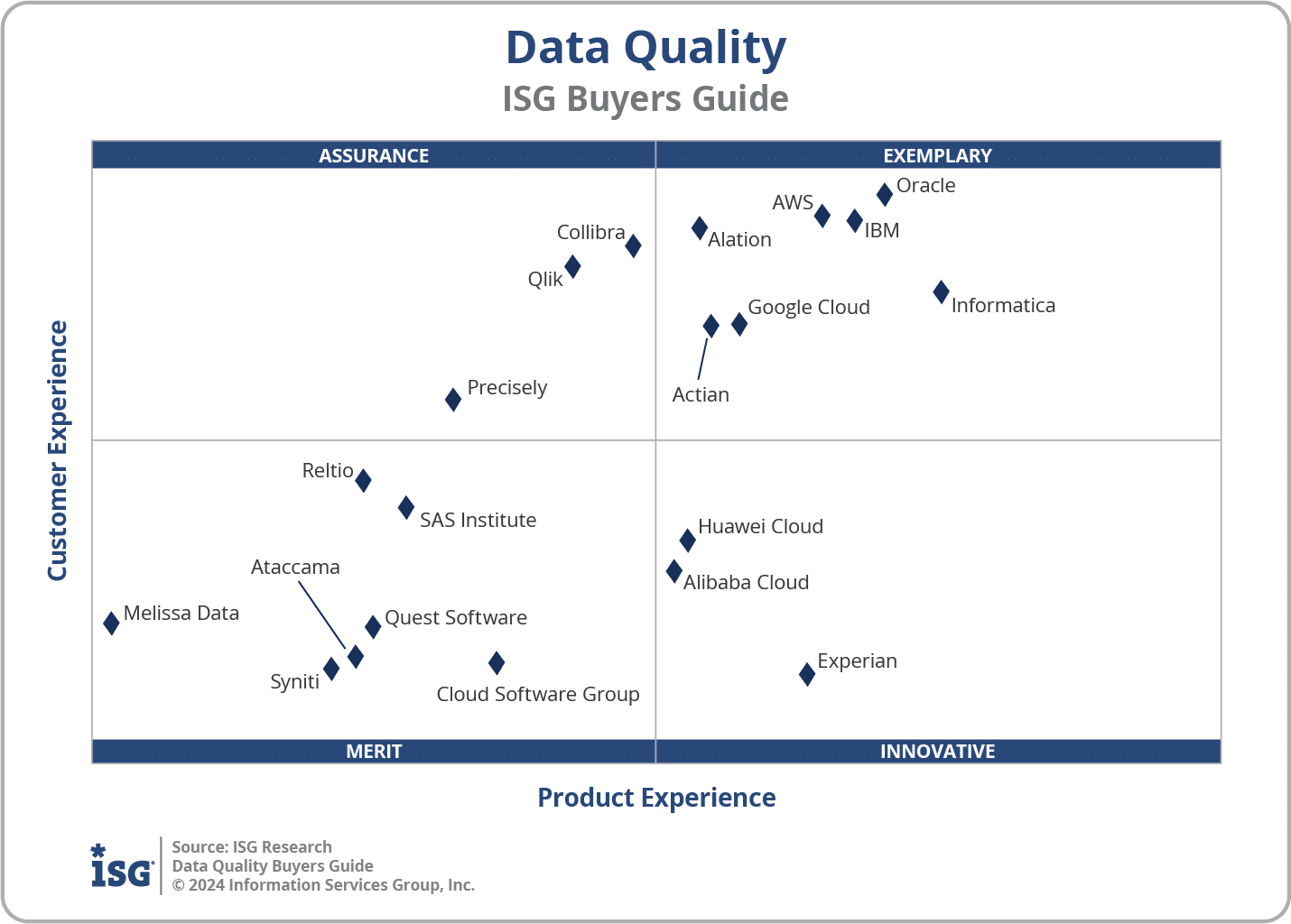

For example, the 2024 Buyers Guide for Data Intelligence by ISG Research, which provides authoritative market research and coverage of the business and IT aspects of the software industry, offers insights into several vendors’ products. The guide scores software providers overall and across key categories, including product experience, capabilities, usability, and ROI.

In addition to the overall guide, ISG Research offers multiple buyers guides that focus on specific areas of data intelligence, including data quality and data integration.

ISG Research Market View on Data Intelligence

Data intelligence is a comprehensive approach to managing and leveraging data across your organization. It combines several key components working seamlessly together to provide a holistic view of data assets and facilitate their effective use.

The goal of data intelligence is to empower all users to access and make use of organizational data while ensuring its quality. As ISG Research noted in its Data Quality Buyers Guide, the data quality product category has traditionally been dominated by standalone products focused on assessing quality.

“However, data quality functionality is also an essential component of data intelligence platforms that provide a holistic view of data production and consumption, as well as products that address other aspects of data intelligence, including data governance and master data management,” according to the guide.

Similarly, ISG Research’s Data Integration Buyers Guide notes the importance of bringing together data from all required sources. “Data integration is a fundamental enabler of a data intelligence strategy,” the guide points out.

Companies across all industries are looking for ways to remove barriers to data access so that data can be treated as an important asset, consumed across the organization, and shared with external partners. To do this effectively and securely, you must consider various capabilities, including data integration, data quality, data catalogs, data lineage, and metadata management.

These capabilities serve as the foundation of data intelligence. They streamline data access and make it easier for teams to consume trusted data for analytics and business intelligence that inform decision making.

ISG Research Criteria for Choosing Data Intelligence Vendors

ISG Research notes that software buying decisions should be based on research. “We believe it is important to take a comprehensive, research-based approach, since making the wrong choice of data integration technology can raise the total cost of ownership, lower the return on investment and hamper an enterprise’s ability to reach its full performance potential,” according to the company.

In the 2024 Data Intelligence Buyers Guide, ISG Research evaluated software and presented findings in key categories that are important to modern businesses. The evaluation offers a framework that allows you to shorten the cycle time when considering and purchasing software.

For example, ISG Research encourages you to follow a process to ensure the best possible outcomes by:

As ISG Research points out in its buyers guide, all the products it evaluated are feature-rich. However, not all the capabilities offered by a software provider are equally valuable to all types of users or support all business requirements needed to manage products on a continuous basis. That’s why it’s important to choose software based on your specific and unique needs.

Buy With Confidence

It can be difficult to keep up with the fast-changing landscape of data products. Independent analyst reports help by enabling you to make informed decisions with confidence.

Actian is providing complimentary access to the ISG Research Data Quality Buyers Guide that offers a detailed software provider and product assessment. Get your copy to find out why Actian is ranked in the “Exemplary” category.

If you’re looking for a single, unified data platform that offers data integration, data warehousing, data quality, and more at unmatched price-performance, Actian can help. Let’s talk.

The post Key Insights From the ISG Buyers Guide for Data Intelligence 2024 appeared first on Actian.

Companies are dealing with more data sources than ever – sales figures, customer profiles, inventory updates, you name it. Data professionals say, on average, data volumes are growing by 63% per month in their organizations. Data teams are struggling to ensure all that data hangs together across systems and is accurate and consistent. Bad data is bad […]

The post Charting a Course Through the Data Mapping Maze in Three Parts appeared first on DATAVERSITY.

Author: Eric Crane

Data sprawl has emerged as a significant challenge for enterprises, characterized by the proliferation of data across multiple systems, locations, and applications. This widespread dispersion complicates efforts to manage, integrate, and extract value from data. However, the rise of data fabric and integration Platform-as-a-Service (iPaaS) technologies offers a promising solution to these challenges […]

The post Data Sprawl: Continuing Problem for the Enterprise or an Untapped Opportunity? appeared first on DATAVERSITY.

Author: Kaycee Lai

In today’s data-driven business landscape, the quality of an organization’s data has become a critical determinant of its success. Accurate, complete, and consistent data is the foundation upon which crucial decisions, strategic planning, and operational efficiency are built. However, the reality is that poor data quality is a pervasive issue, with far-reaching implications that often go unnoticed or underestimated.

Before delving into the impacts of poor data quality, it’s essential to understand what constitutes subpar data. Inaccurate, incomplete, duplicated, or inconsistently formatted information can all be considered poor data quality. This can stem from various sources, such as data integration challenges, data capture inconsistencies, data migration pitfalls, data decay, and data duplication.

Addressing poor data quality requires a multi-faceted approach encompassing organizational culture, data governance, and technological solutions.

The risks and costs associated with poor data quality are far-reaching and often underestimated. By recognizing the hidden impacts, quantifying the financial implications, and implementing comprehensive data quality strategies, organizations can unlock the true value of their data and position themselves for long-term success in the digital age.

The post The Costly Consequences of Poor Data Quality: Uncovering the Hidden Risks appeared first on Actian.

Author: Traci Curran

We are pleased to announce that data profiling is now available as part of the Actian Data Platform. This is the first of many upcoming enhancements to make it easy for organizations to connect, manage, and analyze data. With the introduction of data profiling, users can load data into the platform and identify focus areas, such as duplicates, missing values, and non-standard formats, to improve data quality before it reaches its target destination.
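
To make the profiling idea concrete, here is a minimal Python sketch, not Actian’s implementation, that reports the three focus areas named above: duplicate rows, missing values, and values in non-standard formats. The function `profile_rows`, the column names, and the regular expressions in `EXPECTED_FORMATS` are hypothetical choices for illustration.

```python
import re
from collections import Counter

# Hypothetical format rules for illustration; real rules depend on your data.
EXPECTED_FORMATS = {
    "email": re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$"),
    "postcode": re.compile(r"^\d{5}$"),
}


def profile_rows(rows, columns):
    """Count missing values, non-standard formats, and duplicate rows."""
    missing = Counter()
    nonstandard = Counter()
    for row in rows:
        for col in columns:
            value = row.get(col)
            if value is None or str(value).strip() == "":
                missing[col] += 1
            elif col in EXPECTED_FORMATS and not EXPECTED_FORMATS[col].match(str(value)):
                nonstandard[col] += 1
    # Rows with identical values in every profiled column count as duplicates;
    # only the extra copies are counted, not the first occurrence.
    occurrences = Counter(tuple(row.get(col) for col in columns) for row in rows)
    duplicate_rows = sum(n - 1 for n in occurrences.values())
    return {
        "row_count": len(rows),
        "missing": dict(missing),
        "nonstandard": dict(nonstandard),
        "duplicate_rows": duplicate_rows,
    }


if __name__ == "__main__":
    sample = [
        {"id": 1, "email": "ana@example.com", "postcode": "12345"},
        {"id": 2, "email": "", "postcode": "1234"},
        {"id": 3, "email": "bo@example", "postcode": None},
        {"id": 1, "email": "ana@example.com", "postcode": "12345"},
    ]
    print(profile_rows(sample, ["id", "email", "postcode"]))
```

On the sample rows, the sketch flags one missing and one malformed value in each of the `email` and `postcode` columns, plus one duplicate row. A fuller profiler would typically also report value distributions and distinct counts.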

Data quality is the cornerstone of effective data integration and management. High-quality data enhances business intelligence, improves operational efficiency, and fosters better customer relationships. Poor data quality, on the other hand, can result in costly errors, compliance issues, and loss of trust.

Actian’s enhanced Data Quality solutions empower you to transform raw data into a strategic asset. Ensuring your data is accurate, reliable, and actionable can drive better business outcomes and give you a competitive edge.

Don’t let poor data quality hold your business back. Discover how Actian’s enhanced Data Quality solutions can help you achieve your data management goals. Visit our Data Quality page to learn more and request a demo.

Follow us on social media and subscribe to our newsletter for the latest updates, tips, and success stories about data quality and other Actian solutions.

The post Introducing Actian’s Enhanced Data Quality Solutions: Empower Your Business with Accurate and Reliable Data appeared first on Actian.

Author: Traci Curran

Chief Data Officers (CDOs) and Chief Information Officers (CIOs) play critical roles in navigating the complexities of modern data environments. As data grows exponentially and spans cloud, on-premises, and various SaaS applications, the challenge of integrating and managing this data becomes increasingly daunting. In the guide Top 5 Data Integration Use Cases, we explore five key data integration use cases that empower business users by enabling seamless access, consolidation, and analysis of data. These use cases highlight the significance of robust data integration solutions in driving efficiency, informed decision-making, and overall business success.

Modern organizations face significant data integration challenges due to the exponential growth of cloud-based data. With the surge in projects fueled by cloud computing, IoT, and sophisticated ecosystems, data integration initiatives are under increasing pressure. Effective data integration strategies are necessary to leverage data and other technologies across multiple platforms, such as SaaS applications, cloud-based data warehouses, and internal systems.

As digital transformations advance, the need for efficient data delivery methods grows, encompassing both on-premises and cloud-based endpoints. Integration capabilities provided as a service have emerged as a robust solution to meet the evolving demands of modern data integration. The widespread adoption of SaaS applications among line-of-business (LOB) users is a significant driver for cloud-based integration solutions. Business users require a straightforward way to exchange data across various SaaS applications, often without IT’s involvement.

However, integrating data stored in apps and enterprise systems typically necessitates IT assistance, creating barriers to data access and causing blind spots in both on-premises and cloud data. Enterprise systems hold crucial data that can provide insights into customer interactions, payments, support issues, and other business areas. Yet, this data is often isolated and safeguarded as mission-critical assets.

For effective integration, a solution is needed that enables secure information sharing with all users, independent of engineering or IT resources. Empowering LOBs to access and integrate data securely and independently helps avoid delays and bottlenecks associated with traditional integration methods. Ensuring critical information is easily accessible to all employees is essential for maintaining a competitive advantage, adapting swiftly to evolving business conditions, and building a data-driven culture.

Below are five typical use cases that can benefit from a modern data platform with self-service data integration:

Data platforms with integration capabilities empower business users to access and leverage data stored in data warehouses, as well as other on-premises and cloud-based data. Equipped with pre-built connectors, data quality features, and scheduling functions, these platforms minimize IT involvement. Business users can create their own integration scenarios that retrieve relevant data from various sources, leading to better decision-making and insights suited to their needs.

Integration and automation enhance efficiency and streamline operations. Through system integration and task automation, companies can accelerate data processing and analysis, enabling faster access to information. This saves significant time and allows business users to focus on more strategic endeavors. Automation optimizes workflows, minimizes errors, and ultimately improves operational efficiency.

Integrating CRM systems with marketing automation platforms ensures seamless data flow between sales and marketing teams, optimizing lead management and customer engagement. This integration enhances revenue generation processes and facilitates informed decision-making through real-time tracking and analysis of customer data. By aligning sales and marketing efforts, businesses boost productivity and achieve cohesive goals faster, driving growth and delivering exceptional customer experiences.

Integrating customer data from various touchpoints, such as website interactions, support tickets, and sales interactions, offers a comprehensive understanding of each customer. This holistic view allows marketing teams to personalize activities based on individual customer preferences and behaviors. Integrated data helps identify patterns and trends, maximizing marketing efforts and better controlling budgets. It also enhances customer service, enabling businesses to anticipate and address customer needs effectively.
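
A minimal sketch of such a unified view follows, assuming every touchpoint already shares a common `customer_id` (in practice, matching identities across systems is often the hardest part). The record shapes and the `build_customer_view` function are hypothetical.

```python
from collections import defaultdict


def build_customer_view(web_events, support_tickets, sales_orders):
    """Merge per-touchpoint records into one summary dict per customer_id.

    Assumes all sources already use the same customer_id; real systems often
    need an identity-resolution step first.
    """
    view = defaultdict(lambda: {"page_views": 0, "open_tickets": 0, "total_spend": 0.0})
    for event in web_events:
        view[event["customer_id"]]["page_views"] += 1
    for ticket in support_tickets:
        if ticket["status"] == "open":
            view[ticket["customer_id"]]["open_tickets"] += 1
    for order in sales_orders:
        view[order["customer_id"]]["total_spend"] += order["amount"]
    return dict(view)


if __name__ == "__main__":
    view = build_customer_view(
        [{"customer_id": "c1"}, {"customer_id": "c1"}],
        [{"customer_id": "c1", "status": "open"}],
        [{"customer_id": "c1", "amount": 25.0}],
    )
    print(view["c1"])  # {'page_views': 2, 'open_tickets': 1, 'total_spend': 25.0}
```

Each source contributes only the signal it owns, so adding a new touchpoint means adding one more loop rather than changing the existing ones.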

Integrating operational systems with business intelligence (BI) tools empowers business users to access real-time insights and reports, facilitating data-driven decision-making. Real-time reporting and analytics are indispensable for competitiveness in today’s fast-paced market, allowing businesses to react quickly to market changes and improve customer service with up-to-date information.

Data integration is a strategic necessity for organizations aiming to leverage their data effectively. For CDOs and CIOs, investing in robust data integration solutions is not just about addressing immediate challenges but also about laying the foundation for long-term success. By embracing the use cases outlined above, organizations can empower their teams, streamline operations, and drive sustainable growth. Ultimately, a well-integrated data environment enables leaders to make informed decisions, adapt swiftly to changes, and maintain a competitive edge in the marketplace.

For data leaders dealing with data that resides on-premises, in the cloud, and in hybrid environments, downloading the Top 5 Data Integration Use Cases guide is an essential step towards eliminating data silos.

The post Top 5 Data Integration Use Cases for Data Leaders appeared first on Actian.

Author: Dee Radh

Consumers and citizens are accustomed to getting instant answers and results from businesses. They expect the same lightning-fast responses from the public sector, too. Likewise, employees at public sector organizations need the ability to quickly access and utilize data—including employees without advanced technical or analytics skills—to identify and address citizens’ needs.

Giving employees the information to meet citizen demand and answer their questions requires public sector organizations to capture and analyze data in real time. Real-time data supports intelligent decision making, automation, and other business-critical functions.

Easily accessible, trusted data can also improve operational effectiveness, help predict risk more accurately, and ultimately increase citizen satisfaction. That data must be secure while still allowing frictionless sharing between departments for collaboration and shared use cases.

This raises a pressing question: how can your organization achieve real-time analytics that benefit citizens and staff alike? The answer, at a foundational level, is to implement a modern, high-performance data platform.

Achieving a digital transformation in the public sector involves more than upgrading technology. It entails rethinking how services are delivered, how data is shared, and how your infrastructure handles current and future workloads. Too often in public sector organizations, as in the private sector, legacy systems limit how effectively data can be used.

These systems lack the scalability and integration needed to support digital transformation efforts. They also struggle to make trusted data available when and where it’s needed, including for real-time analytics. Providing the data, analytics, and IT capabilities that modern organizations require is only possible with a modern, scalable data platform. This type of platform is designed to integrate systems and operations, capture and share all relevant data to predict and respond quickly to changes, and improve service delivery to citizens.

At the same time, modernization efforts that include a cloud migration can be complex, often because of the vast amounts of data that must be moved to the cloud and the legacy systems entrenched in organizational processes. That’s why you need a clear, proven strategy and an experienced vendor to make the transition seamless while ensuring data quality.

Hybrid cloud data platforms have emerged as a proven solution for integrating and sharing data in the public sector. By combining on-premises infrastructure with cloud-based services, these platforms offer the flexibility, scalability, and capability to manage, integrate, and share large data volumes.

Another benefit of hybrid solutions is that they let organizations optimize their on-premises investments while keeping cloud costs from spiraling out of control, since unlimited scaling in the cloud comes at a price. Public sector organizations can use a hybrid platform to deliver uninterrupted service, even during peak times or critical events, while making data available in real time for analytics, apps, or other needs.

Smart decision-making demands accurate, trustworthy, and integrated data. This means that upstream, you need a platform capable of seamlessly integrating data and adding new data pipelines—without relying on IT or advanced coding.

Likewise, manual processes and IT intervention will quickly bog down an organization. For example, when a social housing team needs data from multiple systems to ensure buildings meet safety regulations, accessing and analyzing the information might take days or weeks—with no guarantee the data is trustworthy. Automating the pipelines reduces time to insights and ensures data quality measures are in place to catch errors and duplication.
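
The social housing example can be sketched as a small automated pipeline. This is a hypothetical Python illustration, not a description of any specific product: it combines inspection records from several systems, rejects rows missing a building ID or a valid date, skips duplicates that appear in more than one system, and then lists buildings whose latest inspection is older than an assumed 365-day interval. The field names and the interval are assumptions; real safety rules vary by regulation.

```python
from datetime import date


def run_pipeline(*sources):
    """Combine inspection records from several systems.

    Returns (clean, rejected, duplicate_count). Rows without a building_id or
    a plain date go to `rejected`; a building/date pair already loaded from
    another system is counted as a duplicate and skipped.
    """
    seen = set()
    clean, rejected = [], []
    duplicates = 0
    for source in sources:
        for record in source:
            building = record.get("building_id")
            inspected = record.get("inspected_on")
            # Only plain dates are accepted; strings or timestamps are rejected.
            if not building or type(inspected) is not date:
                rejected.append(record)
                continue
            key = (building, inspected)
            if key in seen:
                duplicates += 1
                continue
            seen.add(key)
            clean.append(record)
    return clean, rejected, duplicates


def overdue_buildings(records, today, max_age_days=365):
    """List buildings whose most recent inspection is older than max_age_days.

    The 365-day default is a hypothetical interval, not a regulatory value.
    """
    latest = {}
    for record in records:
        building, inspected = record["building_id"], record["inspected_on"]
        if building not in latest or inspected > latest[building]:
            latest[building] = inspected
    return sorted(b for b, d in latest.items() if (today - d).days > max_age_days)
```

Validating before de-duplicating keeps malformed rows visible in `rejected` instead of letting them collapse into each other silently.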

Data integration is essential to breaking down data silos, providing deeper context and relevancy to data, and ensuring the most informed decisions possible. For example, central government agencies can use the data to drive national policies while identifying issues and needs, and strategically allocating resources.

Moving from legacy systems to a modern platform and migrating to the cloud at a pace your organization is comfortable with enables a range of benefits:

With a solution like the Actian Data Platform, you can do even more. For example, the platform lets you easily connect, transform, and manage data. The data platform enables real-time data access at scale along with real-time analytics. Public sector organizations can benefit, for instance, by using the data to craft employee benefits programs, housing policies, tax guidelines, and other government programs.

The Actian Data Platform can integrate into your existing infrastructure and easily scale to meet changing needs. The platform makes data easy to use so you can better predict citizen needs, provide more personalized services, identify potential problems, and automate operations.

Taking a modern approach to data management, integration, and quality, along with having the ability to process, store, and analyze even large and complex data sets, allows you to digitally transform faster and be better positioned for intelligent decision making. As the public sector strives to effectively serve the needs of the public in a cost-effective, sustainable, and responsible way, data-driven decision-making will play a greater role for all stakeholders.

The path toward an effective and responsive public sector lies in the power of data and a modern data platform. Our new eBook “Accelerate a Digital Transformation in the UK Public Sector” explains why a shift from legacy technologies to a modern infrastructure is essential for today’s organizations. The eBook shares how local councils and central government organizations can balance the need to modernize with maximizing investments in current on-prem systems, meeting the changing needs of the public, and making decisions with confidence.

The post Leverage Real-Time Analytics for Smarter Decision-Making in Public Services appeared first on Actian.

Author: Tim Williams

The guide “8 Key Reasons to Consider a Hybrid Data Integration Solution” delves deeply into the complexities of managing vast and diverse data environments, particularly emphasizing the merits for data engineers working with on-premises data systems. This comprehensive guide illustrates how hybrid data integration not only addresses existing challenges but also prepares organizations for future data management needs.

One of the primary challenges highlighted in the guide is data fragmentation. Organizations today often operate on a mix of on-premises and cloud-based systems, leading to isolated data silos that can hinder efficient data utilization and business operations. Hybrid data integration solutions bridge these gaps, enabling seamless communication and data flow between disparate systems. This integration is crucial for supporting robust analytics and business intelligence capabilities, as it ensures that data from various sources can be consolidated into a cohesive, accessible format.
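
Consolidating sources usually means mapping each system’s schema onto a shared one. The following Python sketch assumes two hypothetical sources: an on-premises export with uppercase column names and amounts in cents, and a cloud API payload with camelCase keys and amounts as decimal strings. All field names are illustrative.

```python
from decimal import Decimal


def from_on_prem(row):
    # Hypothetical on-prem export: uppercase columns, amounts in integer cents.
    return {
        "customer_id": str(row["CUST_NO"]),
        "amount": Decimal(row["ORDER_TOTAL_CENTS"]) / 100,
        "source": "on_prem",
    }


def from_cloud(row):
    # Hypothetical cloud API payload: camelCase keys, amounts as decimal strings.
    return {
        "customer_id": str(row["customerId"]),
        "amount": Decimal(row["total"]),
        "source": "cloud",
    }


def consolidate(on_prem_rows, cloud_rows):
    """Map both sources onto one shared schema: customer_id, amount, source."""
    return [from_on_prem(r) for r in on_prem_rows] + [from_cloud(r) for r in cloud_rows]
```

Using `Decimal` avoids floating-point rounding on monetary values, and tagging each row with its `source` keeps lineage visible after consolidation.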

Resource utilization is another significant aspect addressed by hybrid data integration. This approach allows data engineers to optimally allocate computing resources based on specific needs. For example, processing large volumes of data or handling peak loads may be more feasible using cloud resources due to their scalability and cost-effectiveness. Conversely, certain operations might be better suited to on-premises environments due to performance or security considerations. By balancing these resources, organizations can achieve a more efficient and cost-effective operation.

Security and compliance are paramount in data management, especially with the increasing emphasis on data privacy regulations across different regions. Hybrid data integration systems facilitate adherence to such regulations by enabling organizations to store and manage data in a manner that complies with local data sovereignty laws. Furthermore, these systems enhance data security through advanced measures such as encryption and detailed access controls, ensuring that sensitive information is protected across all environments.
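
The resource-allocation and data-sovereignty points can be expressed as a simple placement policy. This Python sketch is hypothetical: the environment names, regions, and capacity figures are invented, and the rule order (residency first, then sensitivity, then available on-premises capacity, with the cloud as overflow) is one reasonable choice rather than a prescribed method.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    data_region: str      # region the data must stay in, e.g. "EU"
    sensitive: bool       # assumed rule: sensitive data stays on-premises
    compute_hours: float  # estimated compute needed


# Hypothetical environments; on-premises capacity is finite, cloud is treated as elastic.
ENVIRONMENTS = {
    "on_prem_eu": {"region": "EU", "kind": "on_prem", "free_compute_hours": 200.0},
    "cloud_eu": {"region": "EU", "kind": "cloud"},
    "cloud_us": {"region": "US", "kind": "cloud"},
}


def place(workload):
    """Return an environment name: residency first, then sensitivity, then capacity."""
    in_region = {n: e for n, e in ENVIRONMENTS.items() if e["region"] == workload.data_region}
    on_prem = [
        n for n, e in in_region.items()
        if e["kind"] == "on_prem" and e["free_compute_hours"] >= workload.compute_hours
    ]
    cloud = [n for n, e in in_region.items() if e["kind"] == "cloud"]
    # Sensitive workloads may only run on-premises; others prefer on-premises
    # capacity and overflow to the cloud.
    options = on_prem if workload.sensitive else on_prem + cloud
    if not options:
        raise ValueError(f"no compliant environment for {workload.name}")
    return options[0]
```

For example, a large non-sensitive EU batch job that exceeds on-premises capacity lands in `cloud_eu`, while a sensitive job that does not fit on-premises raises an error instead of leaking into the cloud.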

Business agility is greatly enhanced through hybrid data integration. The flexibility provided by these systems allows organizations to swiftly adapt to changing business conditions and data requirements. Whether scaling up operations to meet increased demand or integrating new technologies, hybrid solutions offer the agility needed to respond effectively to dynamic market conditions. This adaptability is crucial for maintaining competitiveness and operational efficiency.

Cost management is another benefit of hybrid data integration. By leveraging the specific advantages of both on-premises and cloud environments, organizations can optimize their operational expenditures. Cloud environments, for instance, allow for scaling resources up or down based on current needs, which can significantly reduce costs during periods of low demand. Conversely, maintaining critical operations on-premises can mitigate risks associated with third-party services and fluctuating cloud pricing models.
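
A worked example of the cost argument, using made-up numbers: suppose demand is low overnight and peaks during business hours. The sketch compares provisioning for the peak all day, autoscaling entirely in the cloud, and a hybrid setup in which an on-premises baseline handles steady demand and the cloud absorbs the overflow. The demand profile and both hourly rates are assumptions chosen for illustration.

```python
# Hypothetical hourly demand (nodes needed) over 24 hours:
# 8 quiet overnight hours, 10 busy daytime hours, 6 moderate evening hours.
DEMAND = [2] * 8 + [10] * 10 + [4] * 6


def fixed_cost(demand, rate):
    """Provision for the peak every hour, whether or not it is used."""
    return max(demand) * len(demand) * rate


def autoscaled_cost(demand, rate):
    """Pay only for the nodes needed in each hour."""
    return sum(demand) * rate


def hybrid_cost(demand, on_prem_nodes, on_prem_rate, cloud_rate):
    """Run a fixed on-prem baseline all day and burst the overflow to the cloud."""
    baseline = on_prem_nodes * len(demand) * on_prem_rate
    overflow = sum(max(0, d - on_prem_nodes) for d in demand)
    return baseline + overflow * cloud_rate


if __name__ == "__main__":
    # Assumed prices per node-hour, chosen only for illustration.
    print(fixed_cost(DEMAND, 0.50))            # 120.0
    print(autoscaled_cost(DEMAND, 0.50))       # 70.0
    print(hybrid_cost(DEMAND, 4, 0.25, 0.50))  # 54.0
```

With these inputs the three approaches cost 120, 70, and 54 units per day. Real comparisons must also account for on-premises capital costs, data transfer fees, and actual utilization, so the ranking can differ in practice.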

The guide also discusses the importance of digital ecosystems and the integration of services from various external partners. Hybrid data integration supports these efforts by enabling seamless data exchanges and collaborations, thereby expanding business capabilities and facilitating entry into new markets or sectors.

Self-service integration capabilities are emphasized as a key feature of modern hybrid systems. These allow business users and departmental staff to manage their integration tasks directly, which speeds up processes and enhances overall business agility. The empowerment of non-specialist users to perform complex integrations democratizes data handling and accelerates decision-making processes.

Lastly, the guide touches on the future-proof nature of hybrid data integration solutions. As technologies evolve, organizations equipped with hybrid systems can easily integrate new tools and platforms without needing to overhaul their existing data infrastructure. This readiness not only protects the organization’s investment in technology but also ensures it can continuously adapt to the latest innovations and industry standards.

In conclusion, hybrid data integration offers a versatile and strategic solution for managing complex data environments, particularly benefiting organizations that manage a blend of on-premises and cloud-based data systems. Actian Data Platform, a hybrid data integration solution, addresses the multifaceted challenges presented throughout the guide. For data engineers dealing with data that resides on-premises, in the cloud, and in hybrid environments, downloading the “8 Key Reasons to Consider a Hybrid Data Integration Solution” guide is an essential step towards developing a future-proof data strategy.

The post 8 Key Reasons to Consider a Hybrid Data Integration Solution appeared first on Actian.

Author: Dee Radh

In the healthcare industry, surgical capacity management is one of the biggest issues organizations face. Hospitals and surgery centers must be efficient in handling their resources. The margins are too small for waste, and there are too many patients in need of care. Data, particularly real-time data, is an essential asset. But it is only […]

The post Overcoming Real-Time Data Integration Challenges to Optimize for Surgical Capacity appeared first on DATAVERSITY.

Author: Jeff Robbins

Data integration is a critical capability for any organization looking to connect their data—in an era when there’s more data from more sources than ever before. In fact, data integration is the key to unlocking and sustaining business growth. A modern approach to data integration elevates analytics and enables richer, more contextual insights by bringing together large data sets from new and existing sources.

That’s why you need a data platform that makes integration easy, and the Actian Data Platform does exactly that. It’s why the platform was recently honored with the prestigious “Data Integration Solution of the Year” award from Data Breakthrough. The Data Breakthrough Awards program recognizes the top companies, technologies, and products in the global data technology market.

Whether you want to connect data from cloud-based sources or use data that’s on-premises, the integration process should be simple, even for those without advanced coding or data engineering skill sets. Ease of integration allows business analysts, other data users, and data-driven applications to quickly access the data they need, which reduces time to value and promotes a data-driven culture.

Being recognized by Data Breakthrough, an independent market intelligence organization, at its 5th annual awards program highlights the Actian platform’s innovative capabilities for data integration and our comprehensive approach to data management. With the platform’s modern API-first integration capabilities, organizations in any industry can connect and leverage data from diverse sources to build a more cohesive and efficient data ecosystem.

The platform provides a unified experience for ingesting, transforming, analyzing, and storing data. It meets the demands of your modern business, whether you operate across cloud, on-premises, or in hybrid environments, while giving you full confidence in your data.

With the Actian platform, you can leverage a self-service data integration solution that addresses multiple use cases without requiring multiple products—one of the benefits that Data Breakthrough called out when giving us the award. The platform makes data easy to use for analysts and others across your organization, allowing you to unlock the full value of your data.

The Actian Data Platform offers integration as a service while making data integration, data quality, and data preparation easier than you may have ever thought possible. The recently enhanced platform also assists in lowering costs and actively contributes to better decision making across the business.

The Actian platform is unique in its ability to collect, manage, and analyze data in real time with its transactional database, data integration, data quality, and data warehouse capabilities. It manages data from any public cloud, multi-cloud or hybrid cloud, and on-premises environment through a single pane of glass.

All of this innovation will be increasingly needed as more organizations spread their data across locations: by 2025, more than 75% of enterprises will have their data in data centers across multiple cloud providers and on-premises. Having data in various places requires a strategic investment in data management products that can span multiple locations and bring the data together.

This is another area where the Actian Data Platform delivers value. It lets you connect data from all your sources and from any environment to break through data silos and streamline data workflows, making trusted data more accessible for all users and applications.

The Actian Data Platform also enables you to prep your data so it’s ready for AI and helps you use your data to train AI models effectively. The platform can automate time-consuming data preparation tasks, such as aggregating data, handling missing values, and standardizing data from various sources.
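As a rough illustration of those preparation steps (standardizing, filling missing values, aggregating), here is a minimal Python sketch using only the standard library. It does not use or represent the Actian platform’s API: the two source layouts, the field names, and the choice of median imputation for missing values are hypothetical assumptions.

```python
from collections import defaultdict
from statistics import median

# Hypothetical raw records from two sources that name and type fields differently.
SOURCE_A = [
    {"Region": "north", "Revenue": "120.5"},
    {"Region": "SOUTH", "Revenue": None},  # missing value
]
SOURCE_B = [
    {"region_name": "North ", "revenue_usd": 80.0},
    {"region_name": "south", "revenue_usd": 40.0},
]

def standardize(record, region_key, revenue_key):
    """Map a source-specific record onto one common schema."""
    raw = record.get(revenue_key)
    return {
        "region": record[region_key].strip().lower(),
        "revenue": float(raw) if raw is not None else None,
    }

def fill_missing(rows):
    """Replace missing revenue with the median of the known values."""
    known = [r["revenue"] for r in rows if r["revenue"] is not None]
    fallback = median(known) if known else 0.0
    return [
        {**r, "revenue": r["revenue"] if r["revenue"] is not None else fallback}
        for r in rows
    ]

def aggregate(rows):
    """Total revenue per region."""
    totals = defaultdict(float)
    for r in rows:
        totals[r["region"]] += r["revenue"]
    return dict(totals)

rows = [standardize(r, "Region", "Revenue") for r in SOURCE_A]
rows += [standardize(r, "region_name", "revenue_usd") for r in SOURCE_B]
summary = aggregate(fill_missing(rows))
print(summary)  # {'north': 200.5, 'south': 120.0}
```

Median imputation is just one option; depending on the data, dropping incomplete rows or flagging them for review may be more appropriate.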

One of our platform’s greatest strengths is its extreme performance. It runs nine times faster and offers 16 times better cost savings than alternative platforms. We’ve also made recent updates to improve usability. In addition to using pre-built connectors, you can easily connect data and applications using REST- and SOAP-based APIs that can be configured with just a few clicks.

Are you interested in experiencing the platform for yourself? If so, we invite you to participate in a guided free trial. For a limited time, we’re offering a 30-day trial with our team of technical experts. With your data and our expertise, you’ll see firsthand how the platform lets you go from data source to decision quickly and with full confidence.

The post Actian Platform Receives Data Breakthrough Award for Innovative Integration Capabilities appeared first on Actian.

Author: Actian Corporation

In today’s data-driven world, the demand for seamless data integration and automation has never been greater. Various sectors rely heavily on data and applications to drive their operations, making it crucial to have efficient methods of integrating and automating processes. However, ensuring successful implementation requires careful planning and consideration of various factors.

Data integration refers to combining data from different sources and systems into a unified, standardized view. This integration gives organizations a comprehensive and accurate understanding of their data, enabling them to make well-informed decisions. By integrating data from various systems and applications, companies can avoid the inconsistencies and fragmentation that often arise from siloed data. This, in turn, leads to improved efficiency and productivity across the organization.

One of the primary challenges in data integration is the complexity and high cost associated with traditional system integration methods. However, advancements in technology have led to the availability of several solutions aimed at simplifying the integration process. Whether through in-house development or third-party solutions, choosing the right integration approach is crucial for success. IT leaders, application managers, data engineers, and data architects play vital roles in this planning process, ensuring that the chosen integration approach aligns with the organization’s goals and objectives.

Before embarking on an integration project, thorough planning and assessment are essential. Understanding the specific business problems that need to be resolved through integration is paramount. This involves identifying the stakeholders, their requirements, and the anticipated benefits of the integration. Evaluating different integration options, opportunities, and limitations is also critical. Infrastructure costs, deployment, maintenance efforts, and the solution’s adaptability to future business needs should be thoroughly considered before deciding on an integration approach.

Effective data integration and automation are crucial for organizations to thrive in today’s data-driven world. With the increasing demand for data and applications, it is imperative to prevent inconsistencies and fragmentation. By understanding the need for integration, addressing foundational areas, and leveraging solutions like Actian, organizations can streamline their data integration processes, make informed decisions, and achieve their business objectives. Embracing the power of data integration and automation will pave the way for future success in the digital age.

If you would like more information on how to get started with your next integration project, download our free eBook: 5 Planning Considerations for Successful Integration Projects.

Actian offers a suite of solutions to address the challenges associated with integration. Its comprehensive suite of products covers the entire data journey from edge to cloud, ensuring seamless integration across platforms. The Actian platform provides the flexibility to meet diverse business needs, empowering companies to effectively overcome data integration challenges and achieve their business goals. By simplifying how individuals connect, manage, and analyze data, Actian’s data solutions facilitate data-driven decisions that accelerate business growth. The data platform integrates seamlessly, performs reliably, and delivers at industry-leading speeds.

The post The Importance of Effective Data Integration and Automation in Today’s Digital Landscape appeared first on Actian.

Author: Traci Curran

With organizations using an average of 130 apps, the problem of data fragmentation has become increasingly prevalent. As data production remains high, data engineers need a robust data integration strategy. A crucial part of this strategy is selecting the right data integration tool to unify siloed data.

Before selecting a data integration tool, it’s crucial to understand your organization’s specific needs and data-driven initiatives, whether they involve improving customer experiences, optimizing operations, or generating insights for strategic decisions.

Begin by gaining a deep understanding of the organization’s business objectives and goals. This will provide context for the data integration requirements and help prioritize efforts accordingly. Collaborate with key stakeholders, including business analysts, data analysts, and decision-makers, to gather their input and requirements. Understand their data needs and use cases, including their specific data management rules, retention policies, and data privacy requirements.

Next, identify all the sources of data within your organization. These may include databases, data lakes, cloud storage, SaaS applications, REST APIs, and even external data providers. Evaluate each data source based on factors such as data volume, data structure (structured, semi-structured, unstructured), data frequency (real-time, batch), data quality, and access methods (API, file transfer, direct database connection). Understanding the diversity of your data sources is essential in choosing a tool that can connect to and extract data from all of them.
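One way to make this evaluation systematic is to record each source in a small, structured inventory. The Python sketch below is a hypothetical example: the `SourceProfile` fields mirror the factors listed above, while the sample sources, the `quality_score` rating, and the 0.8 cutoff are illustrative assumptions.

```python
from dataclasses import dataclass
from enum import Enum

class Structure(Enum):
    STRUCTURED = "structured"
    SEMI_STRUCTURED = "semi-structured"
    UNSTRUCTURED = "unstructured"

class Frequency(Enum):
    REAL_TIME = "real-time"
    BATCH = "batch"

class Access(Enum):
    API = "API"
    FILE_TRANSFER = "file transfer"
    DIRECT_DB = "direct database connection"

@dataclass(frozen=True)
class SourceProfile:
    name: str
    daily_volume_gb: float
    structure: Structure
    frequency: Frequency
    access: Access
    quality_score: float  # hypothetical 0.0-1.0 rating from data profiling

def tool_requirements(sources, min_quality=0.8):
    """Summarize what a candidate integration tool must support."""
    return {
        "access_methods": sorted({s.access.value for s in sources}),
        "structures": sorted({s.structure.value for s in sources}),
        "needs_real_time": any(s.frequency is Frequency.REAL_TIME for s in sources),
        "total_daily_volume_gb": sum(s.daily_volume_gb for s in sources),
        "low_quality_sources": [s.name for s in sources if s.quality_score < min_quality],
    }

inventory = [
    SourceProfile("orders_db", 50.0, Structure.STRUCTURED, Frequency.BATCH,
                  Access.DIRECT_DB, 0.95),
    SourceProfile("crm_saas", 2.0, Structure.SEMI_STRUCTURED, Frequency.REAL_TIME,
                  Access.API, 0.70),
]
print(tool_requirements(inventory))
```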

Consider the volume and velocity of data that your organization deals with. Are you handling terabytes of data per day, or is it just gigabytes? Determine the acceptable data latency for various use cases. Is the data streaming in real-time, or is it batch-oriented? Knowing this will help you select a tool to handle your specific data throughput.
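The volume and latency questions above translate into simple throughput arithmetic. This Python sketch (assuming decimal units, 1 GB = 1,000 MB, and evenly arriving data) compares the average rate for 1 TB per day with the rate needed to load the same volume in a hypothetical two-hour nightly batch window.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400

def average_mb_per_s(daily_volume_gb: float) -> float:
    """Average ingest rate if data arrives evenly across the day."""
    return daily_volume_gb * 1_000 / SECONDS_PER_DAY

def batch_window_mb_per_s(daily_volume_gb: float, window_hours: float) -> float:
    """Rate needed to load a full day's data inside a fixed batch window."""
    return daily_volume_gb * 1_000 / (window_hours * 3_600)

# 1 TB per day, streamed evenly vs. loaded in a 2-hour nightly window
print(round(average_mb_per_s(1_000), 2))          # 11.57
print(round(batch_window_mb_per_s(1_000, 2), 2))  # 138.89
```

Squeezing a day of data into a 2-hour window requires 12 times the average rate (24 / 2), which is why batch windows, not just daily volume, often drive tool sizing.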

Determine the extent of data transformation logic and preparation required to make the data usable for analytics or reporting. Some data integration tools offer extensive transformation capabilities, while others are more limited. Knowing your transformation needs will help you choose a tool that can provide a comprehensive set of transformation functions to clean, enrich, and structure data as needed.

Consider the data warehouse, data lake, and analytical tools and platforms (e.g., BI tools, data visualization tools) that will consume the integrated data. Ensure that data pipelines are designed to support these tools seamlessly. Data engineers can establish a consistent and standardized way for analysts and line-of-business users to access and analyze data.

There are different approaches to data integration. Selecting the right one depends on your organization’s needs and existing infrastructure.

Consider whether your organization requires batch processing or real-time data integration—they are two distinct approaches to moving and processing data. Batch processing is suitable for scenarios like historical data analysis where immediate insights are not critical and data updates can happen periodically, while real-time integration is essential for applications and use cases like Internet of Things (IoT) that demand up-to-the-minute data insights.
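The difference between the two approaches can be sketched with a single metric, an average, computed both ways. This is a minimal Python illustration with hypothetical sensor readings, not a streaming framework: the batch function waits for the full dataset, while the streaming class updates its result as each event arrives.

```python
def batch_average(readings: list[float]) -> float:
    """Batch style: wait for the complete dataset, then compute once."""
    return sum(readings) / len(readings)

class StreamingAverage:
    """Real-time style: update the result incrementally as each event arrives."""

    def __init__(self) -> None:
        self.count = 0
        self.mean = 0.0

    def update(self, value: float) -> float:
        self.count += 1
        # Incremental mean: no need to keep every past value in memory.
        self.mean += (value - self.mean) / self.count
        return self.mean

readings = [10.0, 20.0, 30.0, 40.0]  # hypothetical sensor readings
stream = StreamingAverage()
print([stream.update(r) for r in readings])  # [10.0, 15.0, 20.0, 25.0]
print(batch_average(readings))               # 25.0
```

Both arrive at the same final answer; the streaming version simply has an up-to-date value after every event, which is what IoT-style use cases need.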

Determine whether your data integration needs are primarily on-premises or in the cloud. On-premises data integration involves managing data and infrastructure within an organization’s own data centers or physical facilities, whereas cloud data integration relies on cloud service providers’ infrastructure to store and process data. Some tools specialize in on-premises data integration, while others are built for the cloud or hybrid environments. Choose a tool based on factors such as data volume, scalability requirements, cost considerations, and data residency requirements.

Many organizations have a hybrid infrastructure, with data both on-premises and in the cloud. Hybrid integration provides flexibility to scale resources as needed, using cloud resources for scalability while maintaining on-premises infrastructure for specific workloads. In such cases, consider a hybrid data integration and data quality tool like Actian’s DataConnect or the Actian Data Platform to seamlessly bridge both environments and ensure smooth data flow to support a variety of operational and analytical use cases.

As you evaluate ETL tools, consider the following features and capabilities:

Ensure that the tool can easily connect to your various data sources and destinations, including relational databases, SaaS applications, data warehouses, and data lakes. Native ETL connectors provide direct, seamless access to the latest version of data sources and destinations without the need for custom development. As data volumes grow, native connectors can often scale by taking advantage of the underlying infrastructure’s capabilities. This ensures that data pipelines remain performant even with increasing data loads. If you have an outlier data source, look for a vendor that provides an Import API, webhooks, or custom source development.

Check if the tool can scale with your organization’s growing data needs. Performance is crucial, especially for large-scale data integration tasks. Inefficient data pipelines with high latency may result in underutilization of computational resources because systems may spend more time waiting for data than processing it. An ETL tool that supports parallel processing can handle large volumes of data efficiently. It can also scale easily to accommodate growing data needs. Data latency is a critical consideration for data engineers, because it directly impacts the timeliness, accuracy, and utility of data for analytics and decision-making.
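Parallel processing usually means splitting the data into partitions and transforming them concurrently. The following Python sketch uses the standard library’s `concurrent.futures`; the doubling transformation and the partition count are placeholders, not features of any particular ETL tool.

```python
from concurrent.futures import ThreadPoolExecutor

def transform_partition(rows):
    """Placeholder per-partition transformation (hypothetical business rule)."""
    return [value * 2 for value in rows]

def split_into_partitions(data, n):
    """Split data into at most n contiguous, roughly equal partitions."""
    if not data:
        return []
    size = -(-len(data) // n)  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]

def parallel_transform(data, workers=4):
    partitions = split_into_partitions(data, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map yields results in input order, so row order is preserved.
        return [row for part in pool.map(transform_partition, partitions) for row in part]

print(parallel_transform(list(range(10))))
```

Threads suit I/O-bound work such as pulling from several sources at once; for CPU-heavy transformations in Python, `ProcessPoolExecutor` is usually the better fit because of the global interpreter lock.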

Evaluate the tool’s data transformation capabilities to handle unique business rules. It should provide the necessary functions for cleaning, enriching, and structuring raw data to make it suitable for analysis, reporting, and other downstream applications. The specific transformations required can include data deduplication, formatting, aggregation, and normalization, depending on the nature of the data, the objectives of the data project, and the tools and technologies used in the data engineering pipeline.
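To illustrate three of those transformations (deduplication, formatting, and normalization), here is a small Python sketch. The record layout, the accepted date formats, and the rule of deduplicating on a normalized email address are hypothetical choices for the example.

```python
from datetime import datetime

# Hypothetical raw records; the first and third describe the same customer.
RAW = [
    {"id": 1, "email": "Ann@Example.com ", "signup": "03/15/2024", "spend": 200.0},
    {"id": 2, "email": "bob@example.com", "signup": "2024-04-01", "spend": 50.0},
    {"id": 1, "email": "ann@example.com", "signup": "03/15/2024", "spend": 200.0},
]

def format_date(value):
    """Formatting: convert any accepted date format to ISO 8601."""
    for fmt in ("%Y-%m-%d", "%m/%d/%Y"):
        try:
            return datetime.strptime(value, fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {value!r}")

def clean(records):
    """Deduplication on normalized email, plus email and date formatting."""
    seen, out = set(), []
    for r in records:
        email = r["email"].strip().lower()
        if email in seen:
            continue
        seen.add(email)
        out.append({**r, "email": email, "signup": format_date(r["signup"])})
    return out

def min_max_normalize(records, field):
    """Normalization: rescale a numeric field to the 0-1 range."""
    if not records:
        return []
    values = [r[field] for r in records]
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1.0  # avoid division by zero when all values are equal
    return [{**r, f"{field}_norm": (r[field] - lo) / span} for r in records]

result = min_max_normalize(clean(RAW), "spend")
for row in result:
    print(row)
```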

A robust monitoring and error-handling system is essential for tracking data quality over time. The tool should include data quality checks and validation mechanisms to ensure that incoming data meets predefined quality standards. This maintains data integrity and directly affects the accuracy, reliability, and effectiveness of analytic initiatives. High-quality data builds trust in analytical findings among stakeholders. When data is trustworthy, decision-makers are more likely to rely on the insights generated from analytics. Data quality is also an integral part of data governance practices.
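A basic form of rule-based validation can be sketched in a few lines of Python. The rule names, fields, and the deliberately simple email pattern are hypothetical; the point is that each record either passes every check or is rejected with its reasons, and the pass rate can be logged over time to track data quality.

```python
import re

# Deliberately simple pattern for illustration; not a full RFC 5322 validator.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

# Hypothetical quality rules: name -> predicate that must hold for a valid record.
RULES = {
    "customer_id is present": lambda r: r.get("customer_id") is not None,
    "email is well-formed": lambda r: bool(EMAIL_RE.match(r.get("email") or "")),
    "amount is non-negative": lambda r: isinstance(r.get("amount"), (int, float))
    and r["amount"] >= 0,
}

def validate(records, rules=RULES):
    """Split records into valid and rejected (with failed rule names); report pass rate."""
    valid, rejected = [], []
    for r in records:
        failures = [name for name, check in rules.items() if not check(r)]
        if failures:
            rejected.append((r, failures))
        else:
            valid.append(r)
    pass_rate = len(valid) / len(records) if records else 1.0
    return valid, rejected, pass_rate

batch = [
    {"customer_id": 1, "email": "a@b.com", "amount": 10},
    {"customer_id": None, "email": "bad", "amount": -5},
    {"customer_id": 3, "email": "c@d.org", "amount": 0},
]
valid, rejected, rate = validate(batch)
print(f"pass rate: {rate:.0%}")
for record, reasons in rejected:
    print(record, reasons)
```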

Ensure that the tool offers robust security features to protect your data during transit and at rest. Features such as SSH tunneling and VPNs provide encrypted communication channels, ensuring the confidentiality and integrity of data during transit. It should also help you comply with data privacy regulations, such as GDPR or HIPAA.

Consider the tool’s ease of use and deployment. A user-friendly low-code interface can boost productivity, save time, and reduce the learning curve for your team, especially for citizen integrators, who can come from anywhere within the organization. A marketing manager, for example, may want to integrate web traffic, email marketing, ad platform, and customer relationship management (CRM) data into a data warehouse for attribution analysis.

Assess the level of support, response times, and service-level agreements (SLAs) provided by the vendor. Do they offer comprehensive documentation, training resources, and responsive customer support? Additionally, consider the size and activity of the tool’s user community, which can be a valuable resource for troubleshooting and sharing best practices.

A fully managed hybrid solution like Actian simplifies complex data integration challenges and gives you the flexibility to adapt to evolving data integration needs.

The best way for data engineers to get started is to start a free trial of the Actian Data Platform. From there, they can load their own data and explore what’s possible within the platform. You can also book a demo to see how Actian can help automate data pipelines in a robust, scalable, price-performant way.

For a comprehensive guide to evaluating and selecting the right data integration tool, download the ebook Data Engineering Guide: Nine Steps to Select the Right Data Integration Tool.

The post The Data Engineering Decision Guide to Data Integration Tools appeared first on Actian.

Author: Dee Radh

As businesses continue to tap into ever-expanding data sources and integrate growing volumes of data, they need a solid data management strategy that keeps pace with their needs. Similarly, they need database management tools that meet their current and emerging data requirements.

The various tools can serve different user groups, including database administrators (DBAs), business users, data analysts, and data scientists. They can serve a range of uses too, such as allowing organizations to integrate, store, and use their data, while following governance policies and best practices. The tools can be grouped into categories based on their role, capabilities, or proprietary status.

For example, one category is open-source tools, such as PostgreSQL or pgAdmin. Another category is tools that manage an SQL infrastructure, such as Microsoft’s SQL Server Management Studio, while another is tools that manage extract, transform, and load (ETL) and extract, load, and transform (ELT) processes, such as those natively available from Actian.

Using a broad description, database management tools can ultimately include any tool that touches the data. This covers any tool that moves, ingests, or transforms data, or performs business intelligence or data analytics.

Today’s data users require tools that meet a variety of needs. Some of the more common needs that are foundational to optimizing data and necessitate modern capabilities include:

It’s important to realize that needs can change over time as business priorities, data usage, and technologies evolve. That means a cutting-edge tool from 2020, for example, that offered new capabilities and reduced time to value may already be outdated by 2024. When using an existing tool, it’s important to implement new versions and upgrades as they become available.

You also want to ensure you continue to see a strong return on investment in your tools. If you’re not, it may make more sense from a productivity and cost perspective to switch to a new tool that better meets your needs.

The mark of a good database management tool, and of a good data platform, is the ability to ensure data is easy to use and readily accessible to everyone in the organization who needs it. Tools that make data processes, including analytics and business intelligence, more ubiquitous offer a much-needed benefit to data-driven organizations that want to encourage data usage for everyone, regardless of their skill level.

All database management tools should enable a broad set of users—allowing them to utilize data without relying on IT help. Another consideration is how well a tool integrates with your existing database, data platform, or data analytics ecosystem.

Many database management tool vendors and independent software vendors (ISVs) may have 20 to 30 developers and engineers on staff. These companies may provide only a single tool. Granted, that tool is probably very good at what it does, with the vendor offering professional services and various features for it. The downside is that the tool is not natively part of a data platform or larger data ecosystem, so integration is a must.

By contrast, tools that are provided by the database or platform vendor ensure seamless integration and streamline the number of vendors that are being used. You also want to use tools from vendors that regularly offer updates and new releases to deliver new or enhanced capabilities.

If you have a single data platform that offers the tools and interfaces you need, you can mitigate the potential friction that oftentimes exists when several different vendor technologies are brought together, but don’t easily integrate or share data. There’s also the danger of a small company going out of business and being unable to provide ongoing support, which is why using tools from large, established vendors can be a plus.

The goal of database management tools is to solve data problems and simplify data management, ideally with high performance and at a favorable cost. Some database management tools can perform several tasks by offering multiple capabilities, such as enabling data integration and data quality. Other tools have a single function.

Tools that can serve multiple use cases have an advantage over those that don’t, but that’s not the entire story. A tool that can perform a job faster than others, automate processes, and eliminate steps in a job that previously required manual intervention or IT help offers a clear advantage, even if it only handles a single use case. Stakeholders have to decide whether the cost, performance, and usability of a single-purpose tool deliver enough value to make it a better choice than a multi-purpose tool.

Business users and data analysts often prefer the tools they’re familiar with and are sometimes reluctant to change, especially if there’s a long learning curve. Switching tools is a big decision that involves both cost and learning how to optimize the tool.

If you put yourself in the shoes of a chief data officer, you want to make sure the tool delivers strong value, integrates into and expands the current environment, meets the needs of internal users, and offers a compelling reason to make a change. You also should put yourself in the shoes of DBAs—does the tool help them do their job better and faster?

Tool choices can be influenced by no-code, low-code, and pro-code environments. For example, some data leaders may choose no- or low-code tools because they have small teams that don’t have the time or skill set needed to work with pro-code tools. Others may prefer the customization and flexibility options offered by pro-code tools.

A benefit of using the Actian Data Platform is that we offer database management tools to meet the needs of all types of users at all skill levels. We make it easy to integrate tools and access data. The Actian Platform offers no-code, low-code, and pro-code integration and transformation options. Plus, the unified platform’s native integration capabilities and data quality services feature a robust set of tools essential for data management and data preparation.

Plus, Actian has a robust partner ecosystem to deliver extended value with additional products, tools, and technologies. This gives customers flexibility in choosing tools and capabilities because Actian is not a single product company. Instead, we offer products and services to meet a growing range of data and analytics use cases for modern organizations.

Experience the Actian Data Platform for yourself. Take a free 30-day trial.

The post Top Capabilities to Look for in Database Management Tools appeared first on Actian.

Author: Derek Comingore

In part one of this blog post, we described why there are many challenges for developers of data pipeline testing tools (complexities of technologies, large variety of data structures and formats, and the need to support diverse CI/CD pipelines). More than 15 distinct categories of test tools that pipeline developers need were described. Part two delves […]

The post Choosing Tools for Data Pipeline Test Automation (Part 2) appeared first on DATAVERSITY.

Author: Wayne Yaddow

For most companies, a mixture of both on-premises and cloud environments called hybrid cloud is becoming the norm. This is the second blog in a two-part series describing data management strategies that businesses and IT need to be successful in their new hybrid cloud world. The previous post covered hybrid cloud data management, data residency, and compliance.

There are essential components for enabling hybrid cloud data analytics. First, you need data integration that can access data from all data sources. Your data integration tool needs a high degree of data quality management and transformation to convert raw data into a validated and usable format. Second, you should have the ability to orchestrate pipelines to coordinate and manage integration processes in a systematic and automated way. Third, you need a consistent data fabric layer that can be deployed across all environments and clouds to guarantee interoperability, consistency, and performance. The data fabric layer must have the ability to ingest different types of data as well. Last, you’ll need to transform data into formats and orchestrate pipelines.
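Pipeline orchestration typically models tasks as a dependency graph and runs them in topological order. This Python sketch uses the standard library’s `graphlib` module (Python 3.9+) on a hypothetical five-task pipeline and groups tasks into waves that could run in parallel; it is not how any specific orchestration product is implemented.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
PIPELINE = {
    "extract_crm": set(),
    "extract_erp": set(),
    "quality_check": {"extract_crm", "extract_erp"},
    "transform": {"quality_check"},
    "load_warehouse": {"transform"},
}

def execution_waves(pipeline):
    """Group tasks into waves; tasks within a wave do not depend on each other."""
    sorter = TopologicalSorter(pipeline)
    sorter.prepare()  # raises graphlib.CycleError if dependencies form a loop
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        # A real orchestrator would run these tasks (possibly concurrently)
        # and only mark them done once they succeed.
        sorter.done(*ready)
    return waves

for number, wave in enumerate(execution_waves(PIPELINE), start=1):
    print(number, wave)
```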

There are several costs to consider for hybrid cloud, such as licensing, hardware, administration, and staff skill sets. Software as a Service (SaaS) and public cloud services tend to be subscription-based consumption models that are an Operational Expense (Opex). On-premises and private cloud deployments, by contrast, generally involve software licensing agreements that are a Capital Expenditure (Capex). Subscription software models are great for starting small, but the costs can increase quickly. Alternatively, the upfront cost for traditional software is larger, but your costs are generally fixed, pending growth.
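The Opex versus Capex trade-off can be framed as a break-even calculation. In the Python sketch below, every figure is hypothetical: a monthly subscription fee that may grow with usage is compared against a one-time license plus a fixed monthly running cost, and the function reports the first month in which cumulative subscription spend exceeds the license path.

```python
def cumulative_subscription(months, monthly_fee, monthly_growth):
    """Opex: pay-as-you-go fee that grows by monthly_growth (e.g. 0.05 = 5%) each month."""
    total, fee = 0.0, monthly_fee
    for _ in range(months):
        total += fee
        fee *= 1 + monthly_growth
    return total

def cumulative_license(months, upfront, monthly_maintenance):
    """Capex: large upfront purchase plus a fixed monthly running cost."""
    return upfront + monthly_maintenance * months

def break_even_month(monthly_fee, monthly_growth, upfront, monthly_maintenance, horizon=120):
    """First month where subscription spend exceeds the license path, or None."""
    for month in range(1, horizon + 1):
        opex = cumulative_subscription(month, monthly_fee, monthly_growth)
        capex = cumulative_license(month, upfront, monthly_maintenance)
        if opex > capex:
            return month
    return None

print(break_even_month(10_000, 0.0, 100_000, 2_000))  # 13
```

With $10,000 per month and no growth against a $100,000 license plus $2,000 per month, the subscription becomes more expensive in month 13; any positive usage growth pulls that point earlier.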

Beyond software and licensing costs, scalability is a factor. Cloud services and SaaS offerings provide on-demand scale. On-premises deployments and products can also scale to a certain point, but they may eventually require additional hardware (scale-up) or additional nodes (scale-out). Additionally, these deployments often need costly over-provisioning to meet peak demand.

For proprietary and high-risk data assets, leveraging on-premises deployments tends to be a consistent choice for obvious reasons: you have full control of managing the environment. It is worth noting that your technical staff needs strong security skills to protect on-premises data assets. On-premises environments rarely need infinite scale, and sensitive data assets have minimal year-over-year growth. For low- and medium-risk data assets, leveraging public cloud environments is quite common, including multi-cloud topologies. Typically, these data assets are more varied in nature and larger in volume, which makes them ideal for the cloud. You can leverage public cloud services and SaaS offerings to process, store, and query these assets. Utilizing multi-cloud strategies can provide additional benefits for higher SLA environments and disaster recovery use cases.

The Actian Data Platform is a hybrid and multi-cloud data platform for today’s modern data management requirements. The Actian platform provides a universal data fabric for all modern computing environments. Data engineers leverage a low-code and no-code set of data integration tools to process and transform data across environments. The data platform provides a modern and highly efficient data warehouse service that scales on demand or manually using a scheduler. Data engineers and administrators can configure idle sleep and shutdown procedures as well. This feature is critical as it greatly reduces cloud data management costs and resource consumption.
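Idle sleep and shutdown can be thought of as a simple time-based policy. The Python sketch below is hypothetical and does not reflect the Actian platform’s configuration options or billing model; it assumes compute is billed while running and not billed once it is asleep, and it estimates billable minutes from a list of idle gaps.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class IdlePolicy:
    """Hypothetical idle-management thresholds, in minutes."""
    sleep_after_min: float
    shutdown_after_min: float

    def __post_init__(self):
        if self.shutdown_after_min < self.sleep_after_min:
            raise ValueError("shutdown threshold must not come before the sleep threshold")

    def state(self, minutes_idle: float) -> str:
        if minutes_idle >= self.shutdown_after_min:
            return "shutdown"
        if minutes_idle >= self.sleep_after_min:
            return "sleeping"
        return "running"

def billable_minutes(active_minutes, idle_gaps, policy):
    """Assumption: compute bills while running and stops billing once asleep."""
    return active_minutes + sum(min(gap, policy.sleep_after_min) for gap in idle_gaps)

policy = IdlePolicy(sleep_after_min=15, shutdown_after_min=120)
print(policy.state(5), policy.state(30), policy.state(180))  # running sleeping shutdown
print(billable_minutes(60, [5, 90, 30], policy))             # 95
```

Under these assumptions, 60 active minutes with idle gaps of 5, 90, and 30 minutes bill as 95 minutes, compared with 185 for a warehouse left running.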

The Actian platform supports popular third-party data integration tools leveraging standard ODBC and JDBC connectivity. Data scientists and analysts are empowered to use popular third-party data science and business intelligence tool sets with standard connectivity options. It also contains best-in-class security features to support and assist with regulatory compliance. In addition to that, the data platform’s key security features include management and data plane network isolation, industry-grade encryption, including at-rest and in-flight, IP allow lists, and modern access controls. Customers can easily customize Actian Data Platform deployments based on their unique security requirements.

The Actian Data Platform components are fully managed services when run in public cloud environments and self-managed when deployed on-premises, giving you the best of both worlds. Additionally, we are bringing to market a transactional database as a service component to provide additional value across the data management spectrum for our valued customers. The result is a highly scalable and consumable, consistent data fabric for modern hybrid cloud analytics.

The post Data Management for a Hybrid World: Platform Components and Scalability appeared first on Actian.

Author: Derek Comingore

In an era where data stands as the driving force behind the sweeping wave of digital transformation and GenAI initiatives, FileOps is emerging as a true game-changer. Defined as a low-code/no-code methodology for performing and streamlining file operations, FileOps enables organizations to expedite their digital transformation and GenAI initiatives by empowering them to effectively manage […]

The post How Low-Code FileOps Ensures a Seamless Digital Transformation appeared first on DATAVERSITY.

Author: Milind Chitgupakar

We are pleased to announce the general availability of DataConnect 12.2. This release furthers our commitment to making it easy for our integration customers to connect, transform, and analyze data. With this release, customers will now have a faster path to the cloud using the Actian Data Platform to deploy existing integrations to cloud-based environments.

Highlights of the release include:

Previously, customers would need to deploy integrations to the Actian Data Platform individually. Customers can now take existing or new .djars (projects/workflows) and deploy them to Integration Manager in bulk. This allows designers to expose integrations faster in cloud environments and applications.

The EZScript debugger makes designing and validating maps and processes that leverage EZScript much easier. Users can set breakpoints and test desired or expected behavior of EZScripts. Now all the debugging can be done in line with the DataConnect Studio editor. Engine execution pauses based on the breakpoints the user sets and values are displayed for any variable, macro, or object.

Users can now log into Integration Manager from DataConnect Studio, browse existing configurations, and download the .djar and project files to DataConnect Studio if desired. Users have the option to explode the .djar files and import all the contents to a new project. This benefits clients that need to troubleshoot, edit, or extend integrations in Integration Manager.

Error reporting provides detailed information about errors, suggestions on error resolution, and links to Actian community posts and relevant help topics.

Inline editing is supported for macros, Process Designer, message objects, and browsing. Macros can be created, edited, and accessed inline using DataConnect Studio and saved for reuse in the macro library.

Profile data to ensure data quality, including the ability to perform fuzzy matching across multiple fields in a dataset to identify duplicate records.

Full backward compatibility is provided for customers on version 9 or later.
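The fuzzy matching capability mentioned in these highlights can be illustrated with a generic technique: score each pair of records by weighted string similarity across several fields and flag pairs above a threshold. This Python sketch uses the standard library’s `difflib.SequenceMatcher`; it is not DataConnect’s matching algorithm, and the field weights, 0.85 threshold, and sample records are hypothetical.

```python
from difflib import SequenceMatcher
from itertools import combinations

def similarity(a: str, b: str) -> float:
    """String similarity in [0, 1], ignoring case and surrounding whitespace."""
    return SequenceMatcher(None, a.strip().lower(), b.strip().lower()).ratio()

def record_score(r1, r2, weights):
    """Weighted average of per-field similarities."""
    total = sum(weights.values())
    return sum(w * similarity(r1[f], r2[f]) for f, w in weights.items()) / total

def find_duplicates(records, weights, threshold=0.85):
    """Return (index_a, index_b, score) for every pair scoring at or above threshold."""
    pairs = []
    for (i, a), (j, b) in combinations(enumerate(records), 2):
        score = record_score(a, b, weights)
        if score >= threshold:
            pairs.append((i, j, round(score, 3)))
    return pairs

customers = [
    {"name": "Jonathan Smith", "city": "Boston"},
    {"name": "Jonathon Smith", "city": "boston"},
    {"name": "Maria Garcia", "city": "Denver"},
]
print(find_duplicates(customers, weights={"name": 0.7, "city": 0.3}))
```

Comparing every pair is quadratic in the number of records, so production deduplication usually adds a blocking step (for example, only comparing records that share a postal code) before scoring.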

Click here to access the full release notes.

The post Introducing DataConnect 12.2 appeared first on Actian.

Author: Chris Gilson

Ensuring a hassle-free cloud migration takes a lot of planning and working with the right vendor. While you have specific goals that you want to achieve by moving to the cloud, you can also benefit the business by thinking about how you want to expand and optimize the cloud once you’ve migrated. For example, the cloud journey can be the optimal time to modernize your data and analytics.

Organizations are turning to the cloud for a variety of reasons, such as gaining scalability, accelerating innovation, and integrating data from traditional and new sources. While there’s a lot of talk about the benefits of the cloud—and there are certainly many advantages—it’s also important to realize that challenges can occur both during and after migration.

New research based on surveys of 450 business and IT leaders identified some of the common data and analytics challenges organizations face when migrating to the cloud. They include data privacy, regulatory compliance, ethical data use concerns, and the ability to scale.

One way you can solve these challenges is to deploy a modern cloud data platform that can deliver data integration, scalability, and advanced analytics capabilities. The right platform can also solve another common problem you might experience in your cloud migration—operationalizing as you add more data sources, data pipelines, and analytics use cases.

You need the ability to quickly add new data sources, build pipelines with or without using code, perform analytics at scale, and meet other business needs in a cloud or hybrid environment. A cloud data platform can deliver these capabilities, along with enabling you to easily manage, access, and use data—without ongoing IT assistance.

Yesterday's analytics approaches can't deliver the rapid insights you need to support today's advanced automation, make well-informed decisions, and identify emerging trends as they happen so you can shape product and service offerings. That's one reason why real-time data analytics is becoming more mainstream.

According to research conducted for Actian, technologies commonly operational in the cloud include data streaming and real-time analytics, data security and privacy, and data integration. Deploying these capabilities with an experienced cloud data platform vendor can help you avoid problems that other organizations routinely face, such as cloud migrations that miss their established objectives or a lack of cost transparency that leads to budget overruns.

Vendor assessments are also important. Companies evaluating vendors often look at the functionality and capabilities offered, the vendor's understanding of their business and the personalization of the sales process, and IT efficiency and user experience. A vendor handling your cloud migration should help you deploy the environment that's best for your business, such as a multi-cloud or hybrid approach, without locking you into a specific cloud service provider.

Once organizations are in the cloud, they are implementing a variety of use cases. The most popular ones, according to research for Actian, include customer 360 and customer analytics, financial risk management, and supply chain and inventory optimization. With a modern cloud data platform, you can bring almost any use case to the cloud.

Moving to the cloud can help you modernize both the business and IT. As highlighted in our new eBook “The Top Data and Analytics Capabilities Every Modern Business Should Have,” your cloud migration journey is an opportunity to optimize and expand the use of data and analytics in the cloud. The Avalanche Cloud Data Platform can help. The platform makes it easy for you to connect, manage, and analyze data in the cloud. It also offers superior price performance, and you can use your preferred tools and languages to get answers from your data. Read the eBook to find out more about our research, the top challenges organizations face with cloud migrations, and how to eliminate IT bottlenecks. You’ll also find out how your peers are using cloud platforms for analytics and the best practices for smooth cloud migration.


The post How to Use Cloud Migration as an Opportunity to Modernize Data and Analytics appeared first on Actian.


Author: Brett Martin

If you haven't already seen Astrid Eira's article in FinancesOnline, "14 Supply Chain Trends for 2022/2023: New Predictions To Watch Out For," I highly recommend it for insights into current supply chain developments and challenges. Eira identifies analytics as the top technology priority in the supply chain industry, with 62% of organizations reporting limited visibility. Below are several of Eira's trends that relate to supply chain analytics use cases, along with how the Avalanche Cloud Data Platform provides the modern foundation needed to support complex supply chain analytics requirements more easily.

Supply Chain Sustainability

According to Eira, companies are expected to make their supply chains more eco-friendly. This means that companies will need to leverage supplier data, transportation data, and more in real time to enhance their environmental, social, and governance (ESG) efforts. With better visibility into buildings, transportation, and production equipment, businesses can not only build a more sustainable supply chain but also realize significant cost savings through greater efficiency.

With built-in integration, management, and analytics, the Avalanche Cloud Data Platform helps companies easily aggregate and analyze massive amounts of supply chain data to gain data-driven insights for optimizing their ESG initiatives.

The Supply Chain Control Tower

Eira believes that the supply chain control tower will become more important as companies adopt Supply Chain as a Service (SCaaS) and outsource more supply chain functions. As a result, smaller in-house teams will need the assistance of a supply chain control tower to provide an end-to-end view of the supply chain. A control tower captures real-time operational data from across the supply chain to improve decision making.

The Avalanche platform helps deliver this end-to-end visibility. It can serve as a single source of truth from sourcing to delivery for all supply chain partners. Users can see and adapt to changing demand and supply scenarios across the world and resolve critical issues in real time. In addition to fast information delivery using the cloud, the Avalanche Cloud Data Platform can embed analytics within day-to-day supply chain management tools and applications to deliver data in the right context, allowing the supply chain management team to make better decisions faster.

Edge to Cloud

Eira also points out the increasing use of Internet of Things (IoT) technology in the supply chain to track shipments and deliveries, provide visibility into production and maintenance, and spot equipment problems faster. These IoT trends point to the need for an edge-to-cloud approach, in which data is generated at the edge and then stored, processed, and analyzed in the cloud.

The Avalanche Cloud Data Platform is uniquely capable of delivering comprehensive edge-to-cloud capabilities in a single solution. It includes Zen, an embedded database suited to applications that run on edge devices, with zero administration and a small footprint. The Avalanche Cloud Data Platform transforms, orchestrates, and stores Zen data for analysis.

Artificial Intelligence

Another trend Eira discusses is the growing use of artificial intelligence (AI) for supply chain automation. For example, companies use predictive analytics to forecast demand based on historical data. This helps them adjust production and inventory levels and improve their sales and operations planning processes.
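As a simple illustration of forecasting demand from historical data, here is a minimal sketch using simple exponential smoothing, one of the most basic forecasting techniques. It is not how Avalanche or any particular AI product forecasts demand. The smoothing factor, the reorder rule, and the safety-stock parameter are hypothetical choices made only to show how a forecast can feed an inventory decision.

```python
def exponential_smoothing_forecast(history: list[float], alpha: float = 0.3) -> float:
    """One-step-ahead demand forecast using simple exponential smoothing.

    Each new level blends the latest observation with the previous level:
        level = alpha * observation + (1 - alpha) * level
    A higher alpha reacts faster to recent demand; a lower alpha smooths more.
    """
    if not history:
        raise ValueError("history must contain at least one observation")
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    level = history[0]  # initialize the level with the first observation
    for observation in history[1:]:
        level = alpha * observation + (1 - alpha) * level
    return level


def reorder_quantity(forecast: float, on_hand: float, safety_stock: float) -> float:
    """Hypothetical reorder rule: cover forecast demand plus a safety buffer.

    Returns 0 when current stock already covers forecast demand plus safety stock.
    """
    return max(0.0, forecast + safety_stock - on_hand)


if __name__ == "__main__":
    monthly_units = [120.0, 135.0, 128.0, 150.0, 160.0]  # illustrative history only
    forecast = exponential_smoothing_forecast(monthly_units, alpha=0.3)
    print(f"next-month forecast: {forecast:.1f} units")
    print(f"units to order: {reorder_quantity(forecast, on_hand=90.0, safety_stock=25.0):.1f}")
```

Production forecasting usually accounts for trend and seasonality (for example, Holt-Winters or machine learning models trained on many signals); the single-parameter version here just keeps the idea easy to follow.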

The Avalanche Cloud Data Platform is ideally suited for AI with the following capabilities:

This discussion of supply chain sustainability, the supply chain control tower, edge to cloud, and AI just scratches the surface of what's possible with supply chain analytics. To learn more about the Avalanche Cloud Data Platform, contact our data analytics experts. Here is some additional material if you would like to learn more:

· The Power of Real-time Supply Chain Analytics

The post Data Analytics for Supply Chain Managers appeared first on Actian.


Author: Teresa Wingfield