Understanding Structural Metadata

Today, organizations and individuals face an ever-growing challenge: the sheer volume of data being generated and stored across various systems. This data needs to be properly organized, categorized, and made easily accessible for efficient decision-making. One critical aspect of organizing data is the use of metadata, which serves as a descriptive layer that helps users understand, find, and use data effectively.

Among the various types of metadata, structural metadata plays a crucial role in facilitating improved data management and discovery. This article will define what structural metadata is, why it is useful, and how the Actian Data Intelligence Platform can help organizations better organize and manage their structural metadata to enhance data discovery.

What is Structural Metadata?

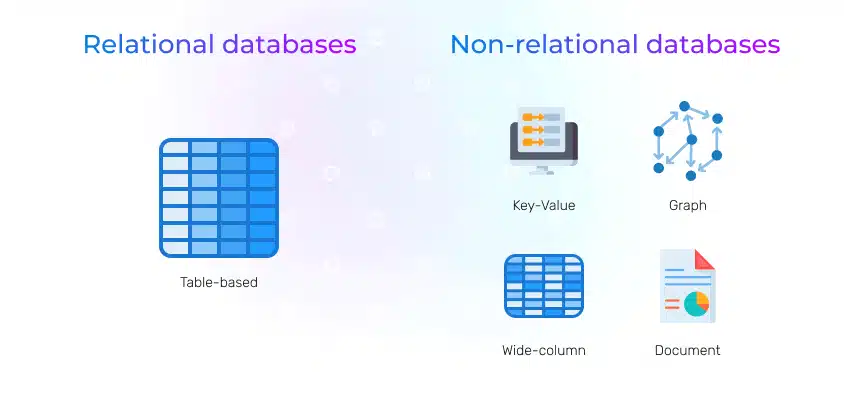

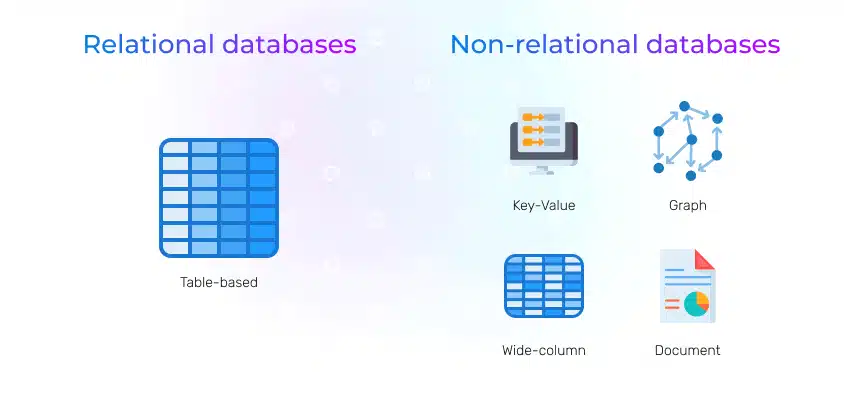

Metadata is often classified into various types, such as descriptive metadata, administrative metadata, and structural metadata. While descriptive metadata provides basic information about the data (e.g., title, author, keywords), and administrative metadata focuses on the management and lifecycle of data (e.g., creation date, file size, permissions), structural metadata refers to the organizational elements that describe how data is structured within a dataset or system.

In simpler terms, structural metadata defines the relationships between the different components of a dataset. It provides the blueprint for how data is organized, linked, and formatted, making it easier for users to navigate complex datasets. In a relational database, for example, structural metadata would define how tables, rows, columns, and relationships between entities are arranged. In a document repository, it could describe the format and organization of files, such as chapters, sections, and subsections.
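
To make the relational example concrete, here is a minimal sketch that uses Python's built-in `sqlite3` module. It creates two hypothetical tables, `customers` and `orders`, and then reads back the structural metadata SQLite keeps about them: column names, declared types, primary keys, and the foreign key that links the two tables. The table and column names are illustrative assumptions. Other databases expose similar information through their own catalogs, such as `information_schema`.

```python
import sqlite3

# In-memory database with two hypothetical tables linked by a foreign key.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (
    id    INTEGER PRIMARY KEY,
    name  TEXT NOT NULL
);
CREATE TABLE orders (
    id          INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(id),
    placed_on   DATE
);
""")

def describe_table(conn, table):
    """Return the structural metadata SQLite keeps for one table."""
    # PRAGMA table_info rows: (cid, name, type, notnull, dflt_value, pk)
    columns = [
        {"name": row[1], "type": row[2],
         "not_null": bool(row[3]), "primary_key": bool(row[5])}
        for row in conn.execute(f"PRAGMA table_info({table})")
    ]
    # PRAGMA foreign_key_list rows: (id, seq, table, from, to, on_update, on_delete, match)
    foreign_keys = [
        {"column": row[3], "references": f"{row[2]}.{row[4]}"}
        for row in conn.execute(f"PRAGMA foreign_key_list({table})")
    ]
    return {"table": table, "columns": columns, "foreign_keys": foreign_keys}

orders_meta = describe_table(conn, "orders")
for col in orders_meta["columns"]:
    print(col)
print(orders_meta["foreign_keys"])
```

The last line prints `[{'column': 'customer_id', 'references': 'customers.id'}]`. That output is the "relationship between entities" described above, recovered from the database itself rather than from documentation.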

Key Features of Structural Metadata

Here are some key aspects of structural metadata:

- Data Relationships: Structural metadata defines the relationships between data elements or files within a dataset. For instance, in a relational database, it describes how tables are linked through primary and foreign keys, and how columns relate to each other within the same table.

- Data Formats and Types: It specifies the data type of each element (e.g., text, numeric, date) and the formats in which data is stored. In data warehousing, structural metadata also defines the schema design, such as whether data is modeled as a star or snowflake schema.

- Hierarchical Organization: It outlines how data is organized hierarchically or sequentially, such as parent-child relationships between datasets or subfolders within a directory structure.

- Data Integrity and Constraints: Structural metadata often includes information on constraints like field lengths, data validation rules, and referential integrity, ensuring that the data is consistent and follows predefined rules.

- Access and Navigation: This metadata helps users understand how to access and navigate large datasets by providing information about where and how data is located, allowing for efficient querying and retrieval.
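
The type and constraint features above can be captured in a small data model. The sketch below is a hypothetical Python representation, not a standard or product format. Each column records an expected type, an optional maximum length, and whether it may be empty. A simple validator then checks a record against those rules, which shows how structural metadata supports data integrity.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

@dataclass
class Column:
    name: str
    dtype: type                       # expected Python type, e.g. str, int, date
    max_length: Optional[int] = None  # only meaningful for str columns
    nullable: bool = True

@dataclass
class TableSchema:
    name: str
    columns: list[Column]

def validate(record: dict, schema: TableSchema) -> list[str]:
    """Return a list of constraint violations for one record (empty if valid)."""
    errors = []
    for col in schema.columns:
        value = record.get(col.name)
        if value is None:
            if not col.nullable:
                errors.append(f"{col.name}: missing required value")
            continue
        if not isinstance(value, col.dtype):
            errors.append(f"{col.name}: expected {col.dtype.__name__}, got {type(value).__name__}")
        elif col.max_length is not None and len(value) > col.max_length:
            errors.append(f"{col.name}: longer than {col.max_length} characters")
    return errors

# Hypothetical schema for a customers table.
customers = TableSchema("customers", [
    Column("id", int, nullable=False),
    Column("name", str, max_length=10, nullable=False),
    Column("signup", date),
])

print(validate({"id": 1, "name": "Ada", "signup": date(2024, 1, 5)}, customers))
print(validate({"id": "1", "name": "A very long name"}, customers))
```

The first call returns an empty list. The second reports that `id` has the wrong type and that `name` exceeds its declared length.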

Why is Structural Metadata Important?

Structural metadata plays a fundamental role in ensuring that data is understandable, accessible, and usable. Here are several reasons why it is essential:

- Data Discovery and Access: Structural metadata enables users to locate and understand data more efficiently. By understanding how data is organized and the relationships that exist between various components, users can easily navigate large datasets to find relevant information without having to sift through each individual data element.

- Data Quality and Consistency: When the structure of a dataset is clearly defined through metadata, it helps ensure that data is consistently formatted and follows specific rules. This consistency helps maintain data quality and reliability, which is crucial for analysis and decision-making.

- Improved Data Integration: Organizations often deal with data spread across different systems, platforms, and applications. Structural metadata facilitates integration by defining how disparate data sources are connected or how they should interact. It helps in joining datasets correctly, enabling better cross-platform analytics.

- Data Governance and Compliance: In regulated industries, where data must meet specific legal or industry standards, structural metadata helps demonstrate that data complies with the relevant rules and regulations. It makes audits, data governance practices, and compliance checks easier and more transparent.

- Efficient Querying and Analytics: In analytics and business intelligence tools, a well-structured dataset can be queried more efficiently. Because structural metadata records which tables, columns, and keys exist and how they connect, analysts and query tools can target the right data directly, which speeds up retrieval and helps business users generate insights quickly and accurately.

- Enhanced Data Management: By providing clear definitions of data formats, relationships, and constraints, structural metadata streamlines the process of managing, updating, and maintaining datasets. It reduces errors and minimizes the risk of misinterpreting data, which can lead to faulty conclusions.
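
To illustrate the integration point, relationship metadata alone can be used to generate a correct join. The sketch below assumes a hypothetical in-memory list of foreign-key declarations. A real system would read these from the database catalog instead.

```python
from typing import NamedTuple

class ForeignKey(NamedTuple):
    table: str       # table holding the foreign key column
    column: str
    ref_table: str   # table being referenced
    ref_column: str

# Hypothetical relationship metadata harvested from a catalog.
FOREIGN_KEYS = [
    ForeignKey("orders", "customer_id", "customers", "id"),
    ForeignKey("order_items", "order_id", "orders", "id"),
]

def join_clause(left: str, right: str, fks: list[ForeignKey]) -> str:
    """Build an SQL JOIN between two tables using their declared foreign key."""
    for fk in fks:
        if (fk.table, fk.ref_table) in ((left, right), (right, left)):
            return (f"SELECT * FROM {left} JOIN {right} "
                    f"ON {fk.table}.{fk.column} = {fk.ref_table}.{fk.ref_column}")
    raise ValueError(f"no declared relationship between {left} and {right}")

print(join_clause("customers", "orders", FOREIGN_KEYS))
```

This prints `SELECT * FROM customers JOIN orders ON orders.customer_id = customers.id`. When no relationship is declared, the function raises an error instead of guessing a join condition.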

Challenges of Managing Structural Metadata

Despite its importance, managing structural metadata is not without challenges.

- Complexity: As datasets grow and become more complex, it can be difficult to keep track of all the different relationships, hierarchies, and formats. Large organizations may struggle to maintain a consistent structure across numerous datasets.

- Data Silos: In many organizations, data is stored in separate systems or applications, each with its own metadata standards. This can create silos where data is not easily discoverable or usable across departments or platforms.

- Lack of Standardization: Without a standardized approach to metadata management, organizations may struggle to consistently define structural metadata across their datasets. This inconsistency can lead to confusion and errors, hindering data integration and analysis efforts.

- Scalability: As organizations continue to generate more data, the challenge of managing structural metadata at scale becomes more pronounced. This requires robust tools and systems capable of handling increasing volumes of metadata efficiently.
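
The standardization problem becomes visible as soon as two systems describe the same table differently. The minimal sketch below compares two invented `{column: type}` descriptions of a customers table and reports missing columns and type mismatches. Type names are compared case-insensitively, so `VARCHAR(255)` and `varchar(255)` count as the same.

```python
def compare_schemas(a: dict[str, str], b: dict[str, str]) -> dict[str, list[str]]:
    """Compare two {column: type} mappings describing the same logical table."""
    return {
        "only_in_a": sorted(a.keys() - b.keys()),
        "only_in_b": sorted(b.keys() - a.keys()),
        "type_mismatch": sorted(
            col for col in a.keys() & b.keys() if a[col].lower() != b[col].lower()
        ),
    }

# Hypothetical descriptions of a "customers" table in two departmental systems.
crm_schema = {"id": "INTEGER", "email": "VARCHAR(255)", "created": "DATE"}
billing_schema = {"id": "BIGINT", "email": "varchar(255)", "country": "CHAR(2)"}

print(compare_schemas(crm_schema, billing_schema))
```

Here `created` exists only in the CRM system, `country` exists only in billing, and `id` is typed differently in each. Any of these differences could break an integration that assumes the two tables match.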

How Actian Can Help Organize and Manage Structural Metadata for Better Data Discovery

The Actian Data Intelligence Platform provides organizations with the tools to handle their metadata efficiently. By enabling centralized metadata management, organizations can easily catalog and manage structural metadata, thereby enhancing data discovery and improving overall data governance. Here’s how the platform can help:

1. Centralized Metadata Repository

The Actian Data Intelligence Platform allows organizations to centralize all metadata, including structural metadata, into a single, unified repository. This centralization makes it easier to manage, search, and access data across different systems and platforms. No matter where the data resides, users can access the metadata and understand how datasets are structured, enabling faster data discovery.

2. Automated Metadata Ingestion

The platform supports the automated ingestion of metadata from a wide range of data sources, including databases, data lakes, and cloud storage platforms. This automation reduces the manual effort required to capture and maintain metadata, ensuring that structural metadata is always up to date and accurately reflects the structure of the underlying datasets.
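
The platform's own connectors are not described here, so the following is only a generic sketch of the ingestion idea, with SQLite standing in as the data source. It lists every table from `sqlite_master` and records each table's columns and declared types as a catalog entry. Re-running the harvest after a schema change refreshes the entry. The catalog entry layout and the source name `shop_db` are assumptions.

```python
import sqlite3

def harvest(conn: sqlite3.Connection, source_name: str) -> list[dict]:
    """Collect one catalog entry per table found in a SQLite database."""
    tables = [row[0] for row in conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name")]
    catalog = []
    for table in tables:
        # PRAGMA table_info rows: (cid, name, type, notnull, dflt_value, pk)
        columns = {row[1]: row[2] for row in conn.execute(f"PRAGMA table_info({table})")}
        catalog.append({"source": source_name, "table": table, "columns": columns})
    return catalog

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (sku TEXT PRIMARY KEY, price REAL)")
print(harvest(conn, "shop_db"))

# Simulate a schema change, then re-ingest to keep the catalog current.
conn.execute("ALTER TABLE products ADD COLUMN category TEXT")
print(harvest(conn, "shop_db"))
```

The second harvest picks up the new `category` column without any manual edit to the catalog. Keeping the catalog current without manual upkeep is the benefit the paragraph above attributes to automation.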

3. Data Lineage and Relationships

With Actian’s platform, organizations can visualize data lineage and track the relationships between different data elements. This feature allows users to see how data flows through various systems and how different datasets are connected. By understanding these relationships, users can better navigate complex datasets and conduct more meaningful analyses.
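
Lineage is commonly modeled as a directed graph whose edges point from a source dataset to the datasets derived from it. The sketch below is not Actian's implementation. It walks such a graph breadth-first to list everything downstream of a given dataset, which answers a typical lineage question: what is affected if this source changes? The dataset names are hypothetical.

```python
from collections import deque

# Hypothetical lineage edges: source dataset -> datasets derived from it.
LINEAGE = {
    "crm.customers": ["staging.customers"],
    "erp.orders": ["staging.orders"],
    "staging.customers": ["warehouse.dim_customer"],
    "staging.orders": ["warehouse.fact_sales"],
    "warehouse.dim_customer": ["reports.revenue_by_region"],
    "warehouse.fact_sales": ["reports.revenue_by_region"],
}

def downstream(dataset: str, edges: dict[str, list[str]]) -> list[str]:
    """Return every dataset reachable from `dataset`, in breadth-first order."""
    seen, order = {dataset}, []
    queue = deque([dataset])
    while queue:
        current = queue.popleft()
        for child in edges.get(current, []):
            if child not in seen:  # skip datasets already reached by another path
                seen.add(child)
                order.append(child)
                queue.append(child)
    return order

print(downstream("crm.customers", LINEAGE))
```

For `crm.customers` this prints `['staging.customers', 'warehouse.dim_customer', 'reports.revenue_by_region']`.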

4. Data Classification and Tagging

The Actian Data Intelligence Platform provides powerful data classification and tagging capabilities that allow organizations to categorize data based on its structure, type, and other metadata attributes. This helps users quickly identify the types of data they are working with and make more informed decisions about how to query and analyze it.
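
Classification rules of this kind are often expressed as patterns over structural attributes such as column names and declared types. The sketch below applies a few invented rules to column metadata. The tag names and patterns are assumptions for illustration, not the platform's built-in classifiers.

```python
import re

# Hypothetical rules: (tag, column-name pattern, allowed declared types or None for any).
RULES = [
    ("PII:email", re.compile(r"e[-_]?mail", re.IGNORECASE), None),
    ("PII:phone", re.compile(r"phone|mobile", re.IGNORECASE), None),
    ("temporal", re.compile(r".*"), {"DATE", "TIMESTAMP"}),
    ("monetary", re.compile(r"price|amount|total", re.IGNORECASE), {"DECIMAL", "REAL"}),
]

def tag_columns(columns: dict[str, str]) -> dict[str, list[str]]:
    """Map each column name to the tags whose name and type rules it matches."""
    return {
        name: [
            tag for tag, pattern, types in RULES
            if pattern.search(name) and (types is None or dtype.upper() in types)
        ]
        for name, dtype in columns.items()
    }

print(tag_columns({"customer_email": "TEXT", "order_total": "DECIMAL",
                   "placed_on": "DATE", "id": "INTEGER"}))
```

Each column gets zero or more tags. In this example, `customer_email` is tagged as PII, `order_total` as monetary, `placed_on` as temporal, and `id` stays untagged.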

5. Searchable Metadata Catalog

The platform’s metadata catalog enables users to easily search and find datasets based on specific structural attributes. Whether looking for datasets by schema, data format, or relationships, users can quickly pinpoint relevant data, which speeds up the data discovery process and improves overall efficiency.
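
Under the hood, a structural search filters catalog entries on attributes such as column names, types, formats, or declared relationships. The following sketch uses a hypothetical in-memory catalog. Its field names are assumptions and do not reflect the platform's actual search interface.

```python
# Hypothetical catalog entries describing datasets by their structure.
CATALOG = [
    {"dataset": "warehouse.fact_sales", "format": "table",
     "columns": {"order_id": "INTEGER", "amount": "DECIMAL", "sold_on": "DATE"},
     "references": ["warehouse.dim_customer"]},
    {"dataset": "warehouse.dim_customer", "format": "table",
     "columns": {"customer_id": "INTEGER", "region": "TEXT"},
     "references": []},
    {"dataset": "lake.clickstream", "format": "parquet",
     "columns": {"session_id": "TEXT", "event_time": "TIMESTAMP"},
     "references": []},
]

def search(catalog, column=None, dtype=None, fmt=None, references=None):
    """Return dataset names matching every structural filter that is given."""
    results = []
    for entry in catalog:
        cols = entry["columns"]
        if column is not None and column not in cols:
            continue
        if dtype is not None and dtype not in cols.values():
            continue
        if fmt is not None and entry["format"] != fmt:
            continue
        if references is not None and references not in entry["references"]:
            continue
        results.append(entry["dataset"])
    return results

print(search(CATALOG, dtype="DATE"))
print(search(CATALOG, references="warehouse.dim_customer"))
```

Filters combine with AND semantics, so adding a filter can only narrow the result set.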

6. Collaboration and Transparency

Actian’s platform fosters collaboration by giving users a shared place to exchange insights, metadata definitions, and best practices. This transparency ensures that everyone in the organization is on the same page when it comes to understanding the structure of data, which is essential for data governance and compliance.

7. Data Governance and Compliance

Using a federated knowledge graph, organizations can automatically identify, classify, and track data assets based on contextual and semantic factors. This makes it easier to map assets to key business concepts, manage regulatory compliance, and mitigate risks.
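
For illustration, a knowledge graph can be reduced to subject-predicate-object triples. The sketch below is a deliberately simplified stand-in that is neither federated nor the platform's model. It links hypothetical data assets to business concepts, links those concepts to a regulation, and then finds the assets that fall within that regulation's scope.

```python
# Hypothetical triples: (subject, predicate, object).
TRIPLES = {
    ("crm.customers.email", "represents", "Customer Contact"),
    ("erp.orders.amount", "represents", "Revenue"),
    ("hr.staff.home_address", "represents", "Employee Address"),
    ("Customer Contact", "is_a", "Personal Data"),
    ("Employee Address", "is_a", "Personal Data"),
    ("Personal Data", "governed_by", "GDPR"),
}

def subjects(predicate, obj):
    """All subjects linked to `obj` through `predicate`."""
    return {s for s, p, o in TRIPLES if p == predicate and o == obj}

def assets_in_scope(regulation):
    """Find assets whose business concept is (transitively) governed by `regulation`."""
    concepts = subjects("governed_by", regulation)
    # Expand to narrower concepts classified under a governed concept via "is_a".
    frontier = set(concepts)
    while frontier:
        narrower = set().union(*(subjects("is_a", c) for c in frontier)) - concepts
        concepts |= narrower
        frontier = narrower
    return sorted(a for c in concepts for a in subjects("represents", c))

print(assets_in_scope("GDPR"))
```

This prints `['crm.customers.email', 'hr.staff.home_address']`. Neither asset is linked to GDPR directly; each is in scope because its business concept is classified as personal data.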

Get a Tour of the Actian Data Intelligence Platform Today

Managing and organizing metadata is more important than ever in the current technological climate. Structural metadata plays a crucial role in ensuring that datasets are organized, understandable, and accessible. By defining the relationships, formats, and hierarchies of data, structural metadata enables better data discovery, integration, and analysis.

However, managing this metadata can be a complex and challenging task, especially as datasets grow and become more fragmented. That’s where the Actian Data Intelligence Platform comes in. With Actian’s support, organizations can unlock the full potential of their data, streamline their data management processes, and ensure that their data governance practices are aligned with industry standards, all while improving efficiency and collaboration across teams.

Take a tour of the Actian Data Intelligence Platform or sign up for a personalized demonstration today.

The post Understanding Structural Metadata appeared first on Actian.

Author: Actian Corporation