The rise of artificial intelligence (AI) has sparked a heated debate about the future of jobs across various industries. Data analysts, in particular, find themselves at the heart of this conversation. Will AI render human data analysts obsolete?

Contrary to the doomsayers’ predictions, the future is not bleak for data analysts. In fact, AI will empower data analysts to thrive, enhancing their ability to inform more insightful and impactful business decisions. Let’s explore how AI, and specifically large language models (LLMs), can work in tandem with data analysts to unlock new levels of value in data and analytics.

The Role of Data Analysts: More Than Number Crunching

First, it’s essential to understand that the role of a data analyst extends far beyond mere number crunching. Data analysts are storytellers, translating complex data into actionable insights that decision-makers can easily understand. They possess the critical thinking skills to ask the right questions, interpret results within the context of business objectives, and communicate findings effectively to stakeholders. While AI excels at processing vast amounts of data and identifying patterns, it lacks the nuanced understanding of business context needed to interpret what those patterns mean, which is where human analysts remain essential.

AI as an Empowering Tool, Not a Replacement

Automating Routine Tasks

AI can automate many routine and repetitive tasks that occupy a significant portion of a data analyst’s time. Data cleaning, integration, and basic statistical analysis can be streamlined using AI, freeing analysts to focus on more complex and value-added activities. For example, AI-powered tools can quickly identify and correct data inconsistencies, handle missing values, and perform preliminary data exploration. This automation increases efficiency and allows analysts to delve deeper into data interpretation and strategic analysis.
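To make the cleaning step concrete, here is a minimal sketch in plain Python of the kind of routine work described above: normalizing inconsistent labels and filling a missing value. The records, field names, and the `clean_rows` helper are all hypothetical, and the median fill is just one simple choice an analyst might make, not a recommendation for every dataset.

```python
from statistics import median

# Hypothetical raw records: inconsistent region labels and one missing sales value.
raw_rows = [
    {"region": " North ", "sales": 120.0},
    {"region": "north", "sales": None},
    {"region": "SOUTH", "sales": 80.0},
    {"region": "South", "sales": 100.0},
]

def clean_rows(rows):
    """Normalize region labels and fill missing sales with the median of known values."""
    known = [r["sales"] for r in rows if r["sales"] is not None]
    fill_value = median(known)  # assumes at least one known value exists
    cleaned = []
    for r in rows:
        cleaned.append({
            "region": r["region"].strip().lower(),
            "sales": r["sales"] if r["sales"] is not None else fill_value,
        })
    return cleaned

cleaned_rows = clean_rows(raw_rows)
```

Whether a median fill is appropriate still depends on why the value is missing, which is exactly the kind of judgment that stays with the analyst.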

Enhancing Analytical Capabilities

AI and machine learning algorithms can augment the analytical capabilities of data analysts. These technologies can uncover hidden patterns, detect anomalies, and predict future trends, often with greater accuracy and speed than legacy approaches. Analysts can use these advanced insights as a foundation for their analysis, adding their expertise and business acumen to provide context and relevance. For instance, AI can identify a subtle trend in customer behavior, which an analyst can then explore further to understand underlying causes and implications for marketing strategies.
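As a rough illustration of automated anomaly detection, the sketch below flags values that sit far from the mean using a simple z-score. This is a basic statistical stand-in rather than a machine learning model, and the order counts, the `find_anomalies` helper, and the threshold of 2.0 are assumptions chosen for the example.

```python
from statistics import mean, pstdev

# Hypothetical daily order counts; the last day is unusually high.
daily_orders = [102, 98, 101, 99, 100, 103, 97, 160]

def find_anomalies(values, threshold=2.0):
    """Return (index, value) pairs whose z-score magnitude exceeds the threshold."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        return []  # all values identical, nothing stands out
    return [
        (i, v) for i, v in enumerate(values)
        if abs(v - mu) / sigma > threshold
    ]

anomalies = find_anomalies(daily_orders)
```

The code can say that the last day looks unusual. It cannot say whether that was a promotion, a data entry error, or a genuine shift in demand, and that is the question the analyst picks up.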

Democratizing Data Insights

Large language models (LLMs), such as GPT-4, can democratize access to data insights by enabling non-technical stakeholders to interact with data in natural language. LLMs can interpret complex queries and generate understandable explanations very quickly, making data insights more accessible to everyone within an organization. This capability enhances collaboration between data analysts and business teams, fostering a data-driven culture where decisions are informed by insights derived from both human and AI analysis.

How LLMs Can Be Used in Data and Analytics Processes

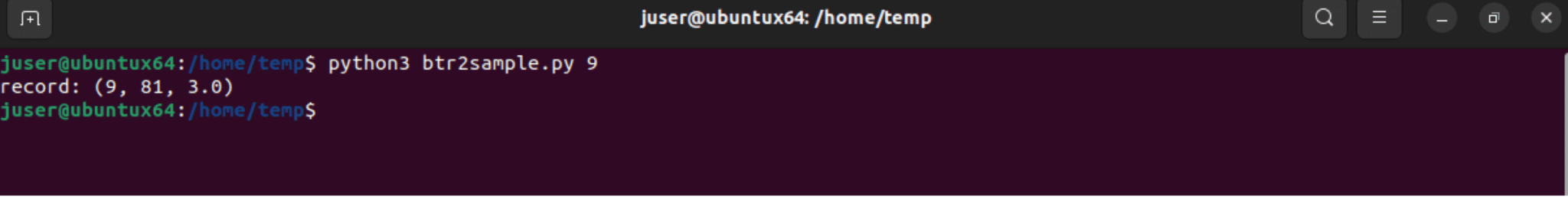

Natural Language Processing (NLP) for Data Querying

LLMs can simplify data querying through natural language processing (NLP). Instead of writing complex SQL queries, analysts and business users can ask questions in plain English. For example, a user might ask, “What were our top-selling products last quarter?” and the LLM can translate this query into the necessary database commands and retrieve the relevant data. This capability lowers the barrier to entry for data analysis, making it more accessible and efficient.
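The flow described above can be sketched with Python's built-in `sqlite3` module. The `translate_question_to_sql` function is a hypothetical stand-in for an LLM call: it simply looks up one known question, so the example runs without any external service. The `sales` table, its columns, and the hardcoded quarter `'2024-Q1'` are likewise made up for illustration.

```python
import sqlite3

def translate_question_to_sql(question):
    """Hypothetical stand-in for an LLM: maps one known question to a SQL string."""
    known = {
        "What were our top-selling products last quarter?": (
            "SELECT product, SUM(units) AS total_units "
            "FROM sales WHERE quarter = '2024-Q1' "
            "GROUP BY product ORDER BY total_units DESC LIMIT 3"
        ),
    }
    return known[question]

# Build a small in-memory database with made-up sales rows.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (product TEXT, quarter TEXT, units INTEGER)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [
        ("widget", "2024-Q1", 50),
        ("gadget", "2024-Q1", 75),
        ("widget", "2024-Q1", 40),
        ("gizmo", "2024-Q1", 20),
        ("gadget", "2023-Q4", 500),
    ],
)

sql = translate_question_to_sql("What were our top-selling products last quarter?")
top_products = conn.execute(sql).fetchall()
conn.close()
```

In a real setup, an analyst would typically review the generated SQL before it runs, since a plausible-looking query can still filter on the wrong period or join the wrong tables.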

Automated Report Generation

LLMs can assist in generating reports by summarizing key insights from data and creating narratives around them. Analysts can use these auto-generated reports as a starting point, refining them and adding their own insights to produce comprehensive business reports. This collaboration between AI and analysts ensures that reports are both data-rich and contextually relevant.

Enhanced Data Visualization

LLMs can enhance data visualization by interpreting data and providing textual explanations. For instance, when presenting a complex graph or chart, the LLM can generate accompanying text that explains the key takeaways and trends in the data. This feature helps bridge the gap between data visualization and interpretation, making it easier for stakeholders to understand and act on the insights.

The Human Element: Context, Ethics, and Interpretation

Despite the advancements in AI, the human element remains irreplaceable in data analysis. Analysts bring context, ethical considerations, and nuanced interpretation to the table. They understand the business environment, can ask probing questions, and can foresee the potential impact of data-driven decisions on various areas of the business. Moreover, analysts are crucial in ensuring that data usage adheres to ethical standards and regulatory requirements, areas where AI still has limitations.

Contextual Understanding

AI might identify a correlation, but it takes a human analyst to understand whether the correlation is meaningful and relevant to the business. Analysts can discern whether a trend is due to a seasonal pattern, a market anomaly, or a fundamental change in consumer behavior, providing depth to the analysis that AI alone cannot achieve.

Ethical Oversight

AI systems can inadvertently perpetuate biases present in the data they are trained on. Data analysts play a vital role in identifying and mitigating these biases, ensuring that the insights generated are fair and ethical. They can scrutinize AI-generated models and results, applying their judgment to avoid unintended consequences.

Strategic Decision-Making

Ultimately, data analysts are instrumental in strategic decision-making. They can synthesize insights from multiple data sources, apply their industry knowledge, and recommend actionable strategies. This strategic input is crucial for aligning data insights with business goals and driving impactful decisions.

The End Game: A Symbiotic Relationship

The future of data analysis is not a zero-sum game between AI and human analysts. Instead, it is a symbiotic relationship where each complements the other. AI, with its ability to process and analyze data at unprecedented scale, enhances the capabilities of data analysts. Analysts, with their contextual understanding, critical thinking, and ethical oversight, ensure that AI-driven insights are relevant, accurate, and actionable.

By embracing AI as a tool rather than a threat, data analysts can unlock new levels of productivity and insight, driving smarter business decisions and better outcomes. In this collaborative future, data analysts will not only survive but thrive, leveraging AI to amplify their impact and solidify their role as indispensable assets in the data-driven business landscape.

The post Will AI Take Data Analyst Jobs? appeared first on Actian.